Archive

Fun with Linux on old hardware

Most of the world’s desktop computers run some flavour of Microsoft Windows. Many corporates and enterprises have volume licensing in place that will use a key management server or Active Directory activation to keep their PC’s automatically licensed. When it comes time to dispose of these PC’s, they cannot in most cases be legally sold with Windows still installed and with a KMS/AD activation system in place, the PC’s licensing will expire at most about 6 months after disposal since it cannot contact the internal activation systems anymore.

The PC’s can either be disposed of after being software wiped and leaving whoever purchases it to handle installing their own operating system – or one could be charitable and install a Linux distro on the PC so that it’s ready for use out the box so to speak. This is what I have been doing at my school as I dispose of old computers, as even if the buyer intends to install Windows again, with Linux pre-installed at least the computer is usable for many tasks immediately.

In most cases, Linux Just Works™ and the computer is ready after about an hour or so of work. Whilst I am not a fan in general of Ubuntu and its family, I install Kubuntu on these PC’s due to KDE’s approximation of Windows, as well as the OEM install mode that makes first setup for the end user much more like a Windows out of box experience. Why more Linux distros don’t have this OEM install mode, I don’t know.

However, sometimes you hit issues thanks to hardware – more often than not it’s due to having an Nvidia graphics card of some sort. Most AMD and Intel graphics solutions will just work out of the box thanks to open source drivers in Mesa and you don’t have to do much of anything else. Nvidia cards though are a different story and this is where I hit a brick wall this past week.

The PC in question is a 2nd gen Intel Core i3 system, running on a DQ67SW motherboard, with an Asus Geforce GT430 dedicated GPU in place. Installing Kubuntu 23.10 wasn’t a problem and everything worked perfectly except for one thing – the cooling fan on the GPU ran at 100% using the open source Nouveau drivers. My research and fiddling didn’t make an improvement unfortunately. It also may be that Nouveau can’t control the fan on the Asus card in particular, as a note on one of the Nouveau pages says it can’t control I²C bus connected fans. I couldn’t in good conscience sell this PC with the fan running like that, so that left me looking at the official closed source Nvidia driver. This is where the pain/fun started…

The GT430 card is supported up to the 390 driver version, which is not available for Kubuntu 23.10. More reading online pointed out that Nvidia has not only ended all support for these old cards (totally understandable) but has also abandoned the driver series. Because of this, and because the kernel’s internal interfaces change between releases, the 390 driver apparently doesn’t work with kernels beyond version 6.2. Since a kernel that old wasn’t available in the repositories for 23.10, I stepped back to 22.04, which has a wider range of older kernels available and also supports the 390 driver.
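As a rough illustration of that kernel ceiling, here is a minimal Python sketch that checks a kernel release string against the 6.2 limit. The limit itself is only what I read online, and the function names are my own invention for the example, so treat this as a sanity check rather than anything official.

```python
import platform
import re

# Assumption from the post: the 390 driver reportedly stops working on
# kernels beyond 6.2. This is second-hand information, not an Nvidia spec.
MAX_KERNEL_FOR_390 = (6, 2)


def parse_kernel_release(release: str) -> tuple[int, int]:
    """Extract (major, minor) from a string such as '5.19.0-50-generic'."""
    match = re.match(r"(\d+)\.(\d+)", release)
    if match is None:
        raise ValueError(f"unrecognised kernel release: {release!r}")
    return int(match.group(1)), int(match.group(2))


def kernel_ok_for_390(release: str) -> bool:
    """True if the kernel is at or below the assumed 6.2 ceiling."""
    return parse_kernel_release(release) <= MAX_KERNEL_FOR_390


if __name__ == "__main__":
    running = platform.release()
    try:
        verdict = "ok" if kernel_ok_for_390(running) else "too new"
    except ValueError:
        verdict = "unknown format"
    print(f"{running}: {verdict} for the 390 driver (assumed limit 6.2)")
```

Run on the target machine, it reports whether the running kernel falls under the assumed limit before you bother installing the driver.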

I downgraded to the 5.19 kernel and installed the 390 driver without much of a fuss. The GPU fan spun down to reasonable levels and the system appeared stable enough. Just one problem though – I had lost audio output on the onboard audio. Error logs showed an error about how the device couldn’t be configured during boot, so it was disabled. This was a showstopper, so I had to keep working. I knew the 6.5 kernel in 22.04 worked fine with the audio, so my thought was to see if a 6.1 kernel would solve the sound issue whilst still letting the GPU work with the 390 driver. The 6.1 kernel did not fully fix the issue – it would now properly load a driver for the sound card, but would complain about missing codec support. Different issue, same outcome of no audio.

No matter what I tried, I wasn’t able to get the onboard audio to work. Eventually I just gave up and went rummaging in our storeroom, where I was able to find a very basic C-Media based PCI sound card which I installed. I disabled the onboard audio, disconnected the front panel header and booted back up – lo and behold the audio worked immediately with the dedicated card.

Whilst the PC is now ready for sale, I still feel frustrated by the whole mess. I know a large part of it is due to Nvidia’s closed source driver, but the blame also lies with the Linux kernel devs who don’t want to create a stable API/ABI, not to mention the zealots who want all pure open source code for all drivers. I can’t blame the Nouveau authors for not fully supporting the card, as this thing is 15 odd years old and I don’t know if anyone even cares about such old hardware at this point to bother with improving and truly completing the drivers.

The funny thing is that even though the hardware is now ancient, I can install Windows 10 22H2 on the machine and install Nvidia’s 390 Windows driver to get the card going at least. Sure performance is horrible and the driver is abandoned and possibly even a security risk, but it works thanks to the stability of the API/ABI.

Rant aside though, this may also just be a very unique situation thanks to the GT430 card. I’ve prepared other PC’s with a Geforce GT610 card and the cooling fan on that never ran at 100% under Nouveau – the 610 cards were made by Gigabyte vs Asus for the 430, so maybe Gigabyte used a way to control the fan Nouveau can actually use. Thankfully I don’t have many of these ancient pieces left, so future systems will either be sold off with a GT610 or 710 card or onboard Intel graphics, all of which should just work properly without any fuss. I’m also entering the stage where machines being sold off are now all UEFI based and not old school BIOS or the fussy early UEFI implementations pre Intel 70 series chipset.

When optical disks suck…

I recently purchased a brand new Pioneer Ultra HD compliant Blu-ray writer for my PC. In terms of optical media capabilities, I believe it’s capable of reading and writing to anything that is still relevant on these kinds of disks in 2022, which makes it a great backup unit for my decade old LG XL drive. What is the point of this intro? Simply put, anything but Blu-ray in optical media terms is dead to me.

I have a spindle of burned DVD-R disks that contains many older games (pirated from my younger days to be honest) and I thought that it would be a good idea to consolidate many physical DVD’s down to 1 or 2 Blu-ray disks. Nearly a decade ago, most of these games were burned to CD and I simply created ISO images of each disk, which were then burned to DVD to save physical space. I have long been aware of the issues with burned media failing to read after some time had passed, but as usual I didn’t think the problem would hit me personally until a few more years had passed. Sadly, the issue has hit me and it hit me a lot harder than I was expecting.

So far, 3 of the disks in the spindle have developed unreadable sectors, causing those disks to be unsalvageable. As mentioned, the content is old PC games, most of which I can now legally purchase from a site like GOG.com – not only would that be supporting a good company, but it would also ensure that the games actually work on modern PC’s and don’t carry horrible copy protection that may not even work on more modern versions of Windows. The problem however is for games that aren’t available on GOG and there’s no other real legal source for them, but then again I am not going to be going back to pirating the games and to be honest, most of them are so old that it’s not even worth my time and effort in the end.

This little experience though has shown me why optical media in general has basically fallen by the wayside and won’t be having a comeback the way vinyl or photographic film have. CD-R and RW disks are basically limited to 700MB and dual layer DVD to about 8.5GB. Not only is that capacity seriously low in this modern era, read and write speeds are also really frustrating. Even a good USB 2.0 flash drive will read and write files faster than any DVD drive and will also be silent when doing so, not to mention being able to have stupidly large capacities.
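To put those capacities in perspective, here is a small Python sketch of the consolidation arithmetic. The CD and dual layer DVD figures come from the paragraph above; the 25GB figure for a single layer Blu-ray is my assumption for the target disk, and all numbers are nominal capacities that ignore file system overhead.

```python
# Nominal capacities in megabytes (decimal), ignoring file system overhead.
CD_MB = 700          # from the post
DVD_DL_MB = 8_500    # dual layer DVD, from the post
BD_SL_MB = 25_000    # assumption: single layer 25 GB Blu-ray as the target


def discs_that_fit(source_mb: int, target_mb: int) -> int:
    """How many completely full source disks fit on one target disk."""
    return target_mb // source_mb


if __name__ == "__main__":
    print("Full CDs per Blu-ray:", discs_that_fit(CD_MB, BD_SL_MB))
    print("Full dual layer DVDs per Blu-ray:", discs_that_fit(DVD_DL_MB, BD_SL_MB))
```

Real disks are rarely full, so in practice more of them will fit than this worst case suggests.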

That being said, I’m still a fan of Blu-ray – mostly for movies but I am coming around to it on the PC side as well. Granted I don’t know the long term stability of burned Blu-ray media just yet, but by all accounts it has got to be better than DVD and CD, formats that date back to the 90’s and 80’s respectively. I must admit though that the process of burning my 1st BD-RE disk wasn’t a lot of fun. I copied and pasted files onto the disk as if it were a giant flash drive and whilst Windows had no problem doing this and burning the disk, the write speeds were slow and both my drives did a lot of spinning up and down whilst creating the BD-RE. It doesn’t help that the disk I was using was limited to only 2x speed. I’ve burned a BD-R before at close to 6x and that was a much better experience. Nonetheless, I managed to consolidate a large pile of DVD-R disks down to 1 BD-RE disk.

Whilst burned media is far more likely to cause the readability issues I mentioned above, commercially pressed disks aren’t immune. I’m currently struggling to make an image of my store bought copy of Medal of Honor Airborne for example. According to ImgBurn, layer 0 of the disk read just fine, but since transitioning over to layer 1 the read speeds have cratered. The disk is thrashing a lot in the drive and whilst there are no read errors currently, the disk is reading files 32 sectors at a time or roughly 44 kB/s. This makes me wonder just how many of my other disks may have developed issues that I don’t know about…
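For a sense of how painful 44 kB/s is, here is a quick Python estimate of the time needed to read a layer at that rate. I don’t know how much data actually sits on layer 1 of this particular disk, so the size used below (half of a nominal 8.5GB dual layer DVD) is purely an assumption, as is treating kB as 1000 bytes.

```python
KB = 1000  # assumption: the kB/s figure is decimal kilobytes

# Assumption: layer 1 holds roughly half of a nominal 8.5 GB dual layer DVD.
ASSUMED_LAYER_BYTES = 8.5e9 / 2


def hours_to_read(size_bytes: float, rate_kb_per_s: float) -> float:
    """Hours needed to read size_bytes at a steady rate in kB/s."""
    return size_bytes / (rate_kb_per_s * KB) / 3600


if __name__ == "__main__":
    hours = hours_to_read(ASSUMED_LAYER_BYTES, 44)
    print(f"About {hours:.1f} hours for the assumed layer size at 44 kB/s")
```

Even if the real layer is much smaller than the assumed size, the read would still take many hours at that speed.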

In closing, I’m feeling a mixed bag of emotions about optical media. Having a game or movie or TV series on disk means that you own the contents and that it can’t be arbitrarily taken away from you like media content on a streaming service can be when the rights owners decide they want to build their own platform or want more licensing money. Games and software that require activation are another story, as if those activation servers go offline, you are sitting with a piece of useless polycarbonate plastic. Most people don’t miss optical disks anymore and with good reason. Optical disks can be good, but when they suck, man do they suck.

Updating the firmware on Ricoh MFD’s

In the not so old days, getting firmware updated on your multi-function devices, a.k.a. the photocopier, required booking a service call with the company you leased the machine from, then having a technician come out with an SD card or USB stick to update the firmware. It was one of those things that just wasn’t done unless necessary, as by and large the machines just worked. Issues were almost always mechanical in nature, never really software related, so the need to update firmware wasn’t a major issue per se.

That being said, modern MFD’s are a lot more complex than machines from a few years ago. Now equipped with touch screens, running apps on the machine to control and audit usage, scanning to document or commercial clouds and more, the complexities have increased dramatically. Increased complexity means more software, which in turn requires more third party code and libraries. If just one of those links in the chain has a vulnerability, you have a problem. Welcome to the same issue the PC world has faced since time immemorial.

I can’t state as to what other manufacturers are doing, but I discovered that Ricoh bit the bullet and made a tool available to end users a few years ago so that they can update the firmware on their MFD’s themselves, without requiring a support technician to come in and do it. This saves time and money and also helps customers protect themselves since they can upgrade firmware immediately rather than waiting on the tech to arrive. Some security threats are so serious that they need urgent patching and the best way to get that done is make the tools and firmware available.

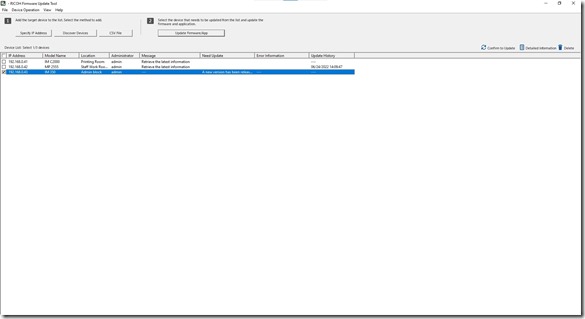

Enter the Ricoh Firmware Update Tool:

The tool is quite simple to use, though it is Windows only at this point in time. Either enter in your MFD’s by hand, or let the tool scan your network for compatible devices. Ricoh has a listing of supported devices here, which is a rather nice long list. I would imagine that going forward, all new MFD’s and networked printers released by Ricoh will end up being supported as well. I can understand that older machines won’t be on that list as they weren’t designed with that sort of thing in mind.

Once the device(s) have been discovered, you let the tool download info from Ricoh to determine what the latest updates are. The tool will tell you under the Need Update column if an update is required or if the machine is on the latest version already.

Once you are ready, you click on the Update Firmware/App button, which brings up the next screen:

You can click on a device and click Detailed Information to see more info about the copier including the existing versions. Clicking on Administrator Settings lets you change whether to just download the firmware into a temp folder, download and install in one go, or install from a temp folder, downloaded from another PC perhaps. Once you’ve made your choice there, clicking the Execute button will start the update process. The tool offers solid feedback at every stage along the way. The firmware is downloaded and pushed to the machine(s), which then proceed to digest and install it. There are a number of steps that happen on the devices themselves and it can take between 30 minutes and an hour per machine to complete.

Once completed, you can see the results by clicking on a device in the main window and selecting Detailed Information. This will bring up this final window which contains a change log of what was updated:

As you can see above for our MP 2555 device, the update was pretty substantial. This makes sense as we’ve had this copier for almost 5 years now and it’s never been updated before this. 3 out of the 4 devices on the network needed to be updated, which has since been completed. The process was painless, if time consuming – there are a lot of different parts of an MFD that need attention so it’s understandable in the end. MFD’s have become a bigger security issue over the years and making firmware updates available so end users can apply them themselves is a big step in the right direction. I hope that all manufacturers in this space end up doing the same thing as quite frankly having a tech come out to update firmware is a very old and inefficient way of doing things in this modern connected world of ours.

PC RGB is a mess

I recently performed a bit of an upgrade on my personal PC, namely transplanting the innards from my old gigantic Cooler Master Cosmos II Ultra tower case into a much smaller Cooler Master CM 694 case. Besides being a decade old, the Cosmos II also lacks a window on the side and doesn’t have any front mounted USB-C ports. The CM 694 offers these amenities in a case that is a lot more friendly on my desk compared to the old behemoth.

As part of this upgrade, I decided to finally go full RGB and get everything matching as much as is humanly possible. What I quickly discovered is that the PC RGB space is a royal mess. Different connectors and different software packages for control are frustrating enough to deal with, but peripherals being largely incompatible between software packages is the real killer annoyance. It’s sort of understandable in the sense that each manufacturer wants to lock you into their ecosystem of devices, but the ideal situation may not go this way. For example, you may want an Asus motherboard with an EVGA graphics card, with Corsair RAM and case fans, Logitech mouse and Steel Series keyboard. All of these support beautiful RGB, but you would have to run at least 4 different software packages to control all the effects, which eats system resources, introduces potential security holes and can lead to the RGB programs not working correctly as they fight each other for control of items. Not to mention, if you aren’t running Windows, your options are seriously limited.

There’s a good video on YouTube that explains much of this as well as taking a good long look at the types of connectors you would commonly encounter on case fans and RGB strips:

However, this video doesn’t get into the annoying software side of things, in that peripherals such as keyboards, mice, headsets, mouse pads, headphone stands etc. are generally locked to whatever manufacturer ecosystem they belong to, i.e. an Asus mouse won’t work with Logitech software.

Thankfully, people got annoyed by this and there are at least 2 promising software solutions out there – OpenRGB and SignalRGB.

OpenRGB is open source software that aims to support every possible RGB device in one piece of software, across Windows, Mac and Linux to the maximum possible extent. Their list of supported hardware is long and grows almost every day.

SignalRGB has a similar goal, but is closed source software and only runs on Windows at this point. The hardware list is very impressive and like OpenRGB, seems to grow every day. The program also comes in two versions, one free and one paid, with the paid version adding extra features such as game integration.

That being said, both SignalRGB and OpenRGB are reverse engineered products and should something go wrong, the original manufacturer can be petty and refuse to honour a warranty if they find out you were using a 3rd party program for example. Also, some manufacturers really cut corners in their RGB implementations, so neither program will have good control over those devices – ASRock motherboards come to mind here for one thing.

It is my hope that eventually someone big steps up and forces an industry standard, but as with anything in the PC space, this seems unlikely – the USB interface for peripherals alone means that it’s too easy to get an ecosystem going and try to tie your users down into it. I guess that the two software applications above really are your best bet for cross brand RGB, but it still doesn’t solve the issue of needing the vendor software installed to update firmware, set configuration settings for keyboards or mice, things that can only be done through the original software. The other alternative is to stick to one vendor, do your research and get products that are guaranteed to work with each other.

Gigabyte Q-Flash Plus to the rescue

This past week, I built yet another new computer for my school. Nearly identical to the last build that I did for the school, the main difference was that I went with a Gigabyte B550M Gaming motherboard instead of an Asus Prime motherboard. Why? Simply put, to avoid the issue I had last time where the motherboard wouldn’t post with the Ryzen 5000 series CPU in it. The crucial difference between the two motherboards is that Gigabyte have included their hardware based Q-Flash system on the motherboard, which lets me update the UEFI even if the UEFI is too old to boot the new generation CPU’s.

That little button on the motherboard is a life and time saver. Of course, the concept of this isn’t exactly new – Asus had a feature like this on their higher end motherboards a decade ago already. However, it’s one of those absolutely awesome features that have taken a long time to trickle down to the budget/entry level side of things and to this day, many motherboards still don’t sport this essential feature even though it would drastically improve the life of someone building a computer. There’s nothing quite like spending time building a PC, getting excited to hit the power button and all of a sudden seeing everything spin up but output no display signal. That scenario makes you start to question your sanity.

So what does it do? Simply put, whilst your motherboard may support a new generation of CPU’s, it more than likely requires a new firmware to do so. This becomes a chicken and egg situation whereby you buy the motherboard and CPU, but the motherboard came from the factory with the older firmware on it and as such can’t boot your new CPU. In the past, the only way to get around this was to use a flashing CPU, i.e. a CPU that the motherboard supports out the box, flash the firmware, remove the flashing CPU and then put in your new CPU. This of course works, but is tedious and does increase the risk of damage in the sense that you are now doing twice the amount of CPU insertion and removal.

Q-Flash Plus and other systems like it basically let you flash the firmware, even if your system has no CPU, RAM or other components installed. I used it to great success this week to get the new motherboard flashed to the latest firmware. The process is pretty easy and goes like this:

- Connect motherboard to your power supply correctly, so 24 pin and 8 pin connectors.

- Use a smaller capacity flash drive. Format it with FAT32 and place the latest firmware on the drive. Rename the file to GIGABYTE.bin

- Place the flash drive into the correct port – this is usually just above the button, but check your motherboard manual to be sure.

- Press the button once and sit back. The LED indicator near the button should start rapidly blinking, as should the USB flash drive if it has an indicator light. After a few minutes, the PC powers up whilst the Q-Flash indicator blinks in a slower pattern.

- When done, the PC in my case restarted itself and posted properly.

In my case, the PC restarted after the flash completed, but I already had all the components installed. Others flash the system completely bare, so in that case the motherboard may just turn off when complete. This isn’t something I have a lot of experience with, so your results may vary.
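The flash drive preparation step from the list above can be sketched in Python as follows. This only covers copying and renaming the firmware file; it assumes the drive is already formatted as FAT32 and mounted, and the function name and paths are hypothetical examples of mine rather than anything from Gigabyte.

```python
import shutil
from pathlib import Path

# File name Q-Flash Plus expects on this board, as per the steps above.
TARGET_NAME = "GIGABYTE.bin"


def stage_firmware(firmware_file: Path, usb_root: Path) -> Path:
    """Copy the downloaded firmware to the root of the flash drive,
    renamed to the file name the motherboard looks for."""
    if not firmware_file.is_file():
        raise FileNotFoundError(firmware_file)
    if not usb_root.is_dir():
        raise NotADirectoryError(usb_root)

    destination = usb_root / TARGET_NAME
    shutil.copyfile(firmware_file, destination)

    # Cheap sanity check that the whole file made it across.
    if destination.stat().st_size != firmware_file.stat().st_size:
        raise OSError("copied file size does not match the source")
    return destination


if __name__ == "__main__":
    # Hypothetical paths: adjust to your download location and drive.
    print(stage_firmware(Path("B550M_firmware.F15"), Path("/media/usb")))
```

Safely eject the drive afterwards so the file is fully written before it goes into the motherboard’s Q-Flash port.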

After using this to get the new PC up and running, I’ve come to the conclusion that it is a feature all motherboards should have, no matter what level they occupy. It’s one of those features that is just too handy, too useful to be reserved for higher end motherboards only. It no doubt does add some expense to manufacturing, but again, it’s one of those things that is just too handy to leave out.

Fun with a new Ryzen PC

This past week at work, I built a brand new PC for the school for the first time since I’ve been there. For pretty much all previous PC builds, I’ve used a past pupil of the school but since he became completely uncompetitive on laptops, I started sourcing those from other vendors. After sourcing costs for this new PC from our local e-commerce giant Takealot, the pricing was about what I would have paid the guy anyway, so I decided to just build the PC myself.

There was a delay on the order itself due to 3rd party vendors struggling a bit (thanks global supply chain mess!) but eventually all the parts arrived. A modern PC has even fewer parts and cables than ever before, so building one is faster and neater than ever really. I went with an AMD Ryzen 5600G CPU, Asus PRIME B550M-K motherboard, 8GB DDR4 3200MHz RAM, 250GB NVMe SSD, 350W power supply and a surprisingly nice Cooler Master chassis. Nothing else, as I already had the keyboard, mouse and monitor in place at school. Not going to set the world on fire performance wise but a very nice powerful system by comparison to the rest of the PC’s in the school.

Just one problem though – the motherboard supports 5000 series Ryzen CPU’s, but generally with a pretty high BIOS revision. I knew that this could pose a problem if the firmware wasn’t up to date on the board. It’s luck of the draw and depends on the date of when the board was manufactured. If an early production run board has been sitting on the shelf for two years, the BIOS revision flashed at the factory will be much older than what has been released since. Such was my luck, as the motherboard was sporting revision 1202, whilst the CPU needed revision 2403 to POST and work.
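The check I should have been able to do before assembling anything boils down to a simple comparison. Here is a minimal Python sketch of it, assuming the revision numbers are plain integers that only ever increase (which seems to hold for this board, but is my assumption); the function name is made up for the example.

```python
def needs_flash(installed_revision: str, required_revision: str) -> bool:
    """True if the installed BIOS revision is older than the revision
    the CPU needs. Assumes purely numeric, increasing revision numbers."""
    return int(installed_revision) < int(required_revision)


if __name__ == "__main__":
    # Revisions from the post: the board shipped with 1202, the CPU needs 2403.
    print(needs_flash("1202", "2403"))
```

The catch, of course, is that you often can’t read the installed revision without a CPU the board can already boot, unless it happens to be printed on a sticker on the board or box.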

Now what to do? I’ve got a brand new system out the box with components that all seem to be working, but I’m not getting any POST. The fans spin and the small onboard RGB strip glows, but no output on any of the display ports. Returning the motherboard wouldn’t really do any good and would take a lot of time with no guarantee that a swapped board would be up to date. The solution? Drop a compatible out the box CPU into the system and flash the board to the latest firmware version, then drop the new CPU back into place and voila! Luckily for me, we have another Ryzen system in the school, using an older 3200G CPU.

Popping the 3200G into the B550 board worked like a charm, I had a POST at the 1st power on attempt. From there it was really just a case of getting into the Asus EZ Flash feature on the board and updating the firmware. Nothing I haven’t done hundreds of times before. After the flash was done, swap in the new CPU and cross fingers. Thankfully the new CPU posted just fine and it was now just a matter of screwing coolers back down in both systems and closing the cases back up.

That being said, after the flash was done, I looked at the B550 motherboard box and noticed that it specifically said that the 3200G and 3400G CPU’s weren’t supported by the board! The reason for this would be that despite being named in the 3000 range, those two CPU’s are actually from the earlier 2000 range Ryzen CPU’s, but have the Radeon GPU bits added in. The AMD B550 chipset apparently doesn’t support pre 3000 series Ryzen CPU’s. Score one for AMD’s increasingly confusingly named CPU/APU series… I was just glad that the flash worked regardless, as I could have ended up in a situation with a bricked motherboard.

AMD used to offer something called a boot kit in the past for situations exactly like this, where they would send you a really low end CPU that was just enough so that you could do the flash. Unfortunately I think that program ended a long time ago, not to mention that the flashing CPU wouldn’t have been supported in this board either. The only real solution these days is to either have a motherboard with some sort of offline flashing capability (“flashback”) or to have a compatible CPU on hand to use for flashing. Either of those options unfortunately incurs a cost, as the CPU would cost extra to have on hand to use all of once, whilst the “flashback” feature is something that hasn’t quite worked its way down to the entry level and budget motherboard segment as yet.

Nonetheless, I feel I still have a good solid combination of hardware with the PC and I am confident it will have a long life in service at the school. It’s also going to be the 1st of quite a few more that I build going forward, budget depending. I may just need to look at a slightly more expensive motherboard that has a “flashback” type feature so that I don’t need to go disassembling PC’s to swap out CPU’s every time!

The Great SSD Project

In this year of 2022, no client computer should still be running with its operating system installed on a mechanical hard disk drive. Conventional spinning drives are just not fast enough to provide a good user experience with today’s software when used as the primary drive. Watching a computer grind itself into the ground as it chews through a Windows update or upgrade is painful, not to mention random slowdowns when Windows decides to hammer the drive for some or another reason.

Knowing that an SSD can extend the life of an older PC by quite a bit, I embarked on a project a few years ago to replace all client PC hard drives with SSD’s instead. It’s been a slow project, limited to replacing about 10 drives a year due to budgetary constraints. Thankfully SSD’s have fallen in price since I started, which has let me purchase many more units at a time and accelerate this project. Having made use of drives from Samsung, Transcend and Western Digital, the project is now entering the end stages with only 5 commonly used PC’s remaining for the swap in the school. I do still have a small computer lab of 14 PC’s that would benefit from the upgrade as well but I have placed that room at the bottom of the priority list as it isn’t used daily.

The cloning process has to date been a bit painful when using Macrium Reflect inside Windows, with clones taking anywhere from 2 hours and up. I’ve since managed to create a Windows PE based version of Reflect which runs quite a bit faster. Regardless however, one can truly feel the sluggish performance of these old 500GB hard drives I am replacing when the clone process happens, as the mechanical drives just have no performance. It’s no wonder the PC’s were choking during daily use with these drives grinding themselves into oblivion. Make no mistake, a ten year old 3rd gen Core i3 PC is never going to be a speed demon, but even with a cheap SSD in it, you unlock performance you never knew the PC was capable of.

Even with these upgrades, the old class and office PC’s are well into their twilight years and won’t last forever. CPU performance has come a long way in 10 years and these PC’s will need to be replaced. That being said, never again will a PC be purchased for the school that contains a mechanical hard drive. These days all PC’s or laptops have to be equipped with an NVMe based SSD for the best possible performance. Whilst NVMe drives are still pricier than SATA based SSD’s, the performance increase is enough to be totally worth it, not to mention the super convenient M.2 socket.

Thankfully these days many PC’s and laptops are built with a SSD as default, though far too many lower end laptops still unfortunately come with a mechanical drive by default. I am really hoping that trend ends much sooner rather than later as one should not have to open up a newly bought laptop just to swap out the mechanical drive for a SSD in 2022.

Now all that’s left is to format and clean 20+ 500GB mechanical drives and somehow find a use for them or get them sold. Easier said than done though…

The mSATA experiment

When it comes to storage options in this modern world of ours, M.2 NVMe drives are king. Physically small & compact, the drives offer excellent speeds, reduce cable clutter inside a desktop PC and save space, battery life and heat inside a laptop or tablet. However, ten or so years ago, M.2 did not exist as a slot, but there was something else: mSATA.

Mini-SATA never really took off in the consumer space, but did sort of have its 5 minutes of glory when Windows 8 came out and manufacturers were falling over themselves to make some form of tablet to go with the new OS. Traditional 2.5” hard drives wouldn’t work obviously, nor would a drop in standard 2.5” SSD. Soldering the storage chips to the mainboard was always an option (much like how the iPad does it) but many manufacturers instead turned to mSATA to do the job. It was expensive (all flash based SSD products were back then) and capacities were limited (again, as all SSD’s were at the time).

We have 39 PC’s in our school from the 2012-3 era that were given to us by our provincial Education Department to make up our Computer Lab at the time. These PC’s have an Intel DQ77MK motherboard in them, which has a mini PCIe slot that accepts mSATA drives, as well as 6 normal SATA ports. Unfortunately the mSATA slot only works at 3Gb/s, which does really sort of limit what the PC can do with an mSATA SSD in it compared to hooking up a 2.5” drive to a 6Gb/s SATA port.
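To see what the slower link means in practice, here is a small Python sketch that converts a SATA line rate in gigabits per second into the theoretical payload ceiling in megabytes per second. It relies on SATA’s 8b/10b encoding, where every data byte costs 10 bits on the wire, and ignores protocol overhead, so real drives will land somewhat below these numbers.

```python
def sata_payload_mb_per_s(line_rate_gbps: float) -> float:
    """Theoretical payload ceiling for a SATA link.

    SATA uses 8b/10b encoding, so each byte of data takes 10 bits on
    the wire. Protocol overhead is ignored in this estimate.
    """
    bits_per_second = line_rate_gbps * 1e9
    bytes_per_second = bits_per_second / 10
    return bytes_per_second / 1e6


if __name__ == "__main__":
    for rate in (3, 6):
        print(f"{rate} Gb/s link: about {sata_payload_mb_per_s(rate):.0f} MB/s at best")
```

In other words, the mSATA slot caps out at roughly half of what the faster SATA ports can deliver, which is still far beyond what any mechanical drive manages.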

I have been replacing the 500GB Seagate mechanical drives in these computers with normal 2.5” SSD units for a while now, but I recently took the chance to order a mSATA drive to put into one of these PC’s – I actually ordered the drive more as an experiment for myself than anything else.

Here’s the KingFast 256GB mSATA SSD I ordered. The screw holes at the top are slightly bulging as I tried and forced through screws that were too tight for the holes. The drive did not come with any mounting screws, so I was trying to find screws to use when mounting the drive inside the computer. Much to my chagrin, the correct mounting screws were actually inside the PC already, screwed into the posts that the card would rest on. Oops… Luckily no damage to the card, just a little visual ugliness to the screw holes, which obviously wouldn’t be seen once the side panel of the case was back on.

Once the card was installed in the PC, the 1st boot up and return to Windows ended up with the PC not detecting the card for some reason. I rebooted and went into the UEFI to find the card was now visible. Then it was back into Windows to start the clone process from the mechanical hard drive to the SSD using Macrium Reflect. Unfortunately the entire process took close to 6 and a half hours. I don’t know why, but I have never had good speeds using Macrium to clone drives in my school, and the mechanical drive in this PC was suspect as well. What made it worse is that on that day, our country started implementing rolling blackouts again and my school area was scheduled to be hit in the evening. Without intending it to be so, it had become a race of clone vs blackout. Thankfully, the clone did finish in time, so I could shut the machine down safely from home.
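For a sense of just how slow that clone was, here is a back of the envelope calculation in Python. It assumes the worst case where every byte of the 500GB source drive was copied. I did not record how much data was actually on the drive, so treat the result as an upper bound on the average speed. The function name is just for illustration.

```python
def average_throughput_mb_per_s(bytes_copied: float, hours: float) -> float:
    """Average copy speed in MB/s over the whole clone."""
    seconds = hours * 3600
    return bytes_copied / seconds / 1_000_000


# Assumption: the full 500GB drive was copied, which is the most generous case.
full_drive_bytes = 500 * 1_000_000_000
rate = average_throughput_mb_per_s(full_drive_bytes, 6.5)
print(f"Average clone speed: at most about {rate:.1f} MB/s")
```

Even in this generous case the average is far below what a healthy mechanical drive or a 3Gb/s link can sustain, so the mSATA slot was not the bottleneck here.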

The next day I went up to the classroom and removed the mechanical drive and SATA cable. I put the PC back on its shelf in the teacher’s desk and the system booted from the mSATA drive with no issues. General performance in Windows is light years ahead of the mechanical drive, but it’s not quite as snappy as what an SSD with a 6Gb/s link would be. Still, it’s more than adequate for the particular classroom until the room eventually gets a new computer. As for the mechanical drive I took out, I am pretty sure that the drive is dying, as nothing else can explain such putrid performance.

This will be my only real experiment with mSATA I think. None of the other types of computers in the school support it and the cost of the drives is higher than equivalent capacity 2.5” drives. Besides, M.2 drives have since superseded mSATA in every possible way that matters. This really was just to satisfy my curiosity in the end more than anything else.

Juicing a Dell Inspiron 3593

“Ah my laptop is so slow! I’m going to throw this thing against the wall!!”

How often have you heard complaints like this over the years? I’ve lost count by now. Which brings me to this post and how having a touch of knowledge can save money and make people a lot happier.

For most people, buying a laptop appears to be a major investment akin to buying a car. Not only that, most people decide that they need to penny pinch when buying the laptop, only to curse and scream when the thing is slow and doesn’t run like their friend’s MacBook does. Cue the “Apple make the best laptops” line or something similar.

The fact of the matter though is that for some reason I can’t understand, manufacturers still haven’t gotten the idea into their collective heads that no laptop should be shipped with its OS on a mechanical spinning hard drive. Having spinning rust is great for extra internal storage, but no OS should ever be forced to run off one of these anymore. The experience is downright terrible as Windows, Linux and macOS have all increased in size and complexity over the last few years and spinning rust can no longer provide a sane, usable work experience.

I do think that for most manufacturers, it is still unfortunately cheaper to include a spinning rust drive even if the rest of the laptop has really decent and usable specifications. That and/or the fact that bigger numbers tend to impress people more thanks to marketing. Take two identical laptops, where one has a 1TB mechanical drive and the other a 256GB SSD. Unless you know a bit about computers, most folk are going to go for the 1TB drive as it’s bigger and bigger must be better, right?

The situation is improving though thankfully, but it’s still not the default yet to get an SSD in an entry level to low mid range laptop. Even though a cheap 240 or 250GB SATA SSD would probably do wonders for customer satisfaction ratings in this sensitive price bracket, the mechanical still stubbornly reigns as the default. I can only hope that with Windows 11 out soon, more manufacturers get the point and leave the mechanical in only the absolute lowest of the low devices. A small knock in profit margins would be more than offset by happier customers and fewer returns thanks to the speed of the SSD.

All this ranting finally brings me to my point. A colleague of mine owns a Dell Inspiron 3593 laptop with 10th Gen Core i7 with 4 cores and 8 threads, 8GB RAM, Full HD screen, DVD writer, LAN port, dedicated Geforce MX graphics and a 1TB mechanical drive, all wrapped in a nicely built and not too heavy chassis. Just one problem – the machine was running slower than a snail buried in concrete. Boot time to desktop, opening any application, you name it was an exercise in patience. Task Manager constantly showed 100% usage on the hard drive. I knew that this laptop could be capable of so much more. When the head of the maths department got me to look at new laptops, I told her that my colleague didn’t need a new one, just 2 parts to unleash the beast so to speak. One extra 8GB stick of RAM and one 500GB Samsung 980 NVMe drive later and the laptop is keeping pace with the cheetahs now.

As a bonus, the mechanical drive stayed in and is now bulk storage for my colleague. Boot time went from almost 3 minutes and 25-30 spins of the Windows spinner down to under 20 seconds and 3 spins max. My colleague literally couldn’t believe her eyes when she saw that and felt the performance difference in the laptop. It literally was like a totally different device was wearing the skin of the old laptop.

In closing, 2 simple upgrades that didn’t cost nearly as much as a new laptop not only saved us that cost, it prevented e-waste and made someone very happy. To paraphrase a tired old joke, the screw costs 10 cents but the knowledge of where to use the screw and how many turns to tighten the screw correctly costs $10000…

Thoughts on the AMD Ryzen 3 PRO 4350G

Apparently this APU is not meant to be sold to the general public as a standard SKU but is restricted rather to OEM’s who build complete PC’s and sell those. My guess is that thanks to the great chip squeeze of 2020/1, AMD decided to enact this policy and focus rather on getting the Zen 3/Ryzen 5000 based APUs out to the public at a forthcoming stage.

The PRO branding simply means that some business security features have been added to an otherwise base APU, similar to what Intel does with vPro on some of their chips. Having not used vPro or the specific Ryzen PRO enterprise features on offer in any meaningful sense, I’m not particularly concerned if they are effective or not. All I was looking for was an entry level AMD CPU with built in graphics that wouldn’t break the bank and had just enough features to be something that could last for another 5+ years.

AMD have admitted that due to the silicon shortage, they have been focussing on the higher end chips that bring in more revenue for them. As such, finding one of the Zen+/Ryzen 3000 APUs has been nigh on impossible. Every local online shop I tried simply did not have stock and had no indication of when/if they would be able to restock. This was a problem as I was busy rebuilding my dad’s PC and I needed an APU to complete the project. I was getting seriously frustrated and then a search one day turned things around out the blue.

Searching on Takealot, I found this Ryzen 3 PRO 4350G APU available, seemingly out the blue. I put the order in for the chip, along with the other parts I needed to finish my dad’s build. After waiting about 2 weeks for everything to show up, I went to go collect, only to discover that the APU had been cancelled out of my order. Yet when I followed the links, it was marked as on sale again in the store. Thankfully the refunded store credit matched the price and I immediately re-ordered the chip. 2 days later the chip was delivered to my work without any fuss. Unlike the retail boxes, I got a very plain box with no external markings of note. Included was a SR-2 cooler and the chip itself wrapped in some foam. I wasn’t impressed by this as that is just begging for the pins to be bent.

Sure enough, when I attempted to install the chip, it wouldn’t fit into the socket. Resisting the urge to force it in, I got a business card and ran it through the rows of pins where I saw the most resistance. It took a couple of tries but I was eventually able to straighten the bent pins enough that they went into the socket. Apart from my Ryzen 2700X, I haven’t worked with CPU’s with pins since the Pentium 3 days (I never fiddled with Socket 478 for Intel or any AMD socket prior to my 2700X).

Once powered up, the annoying ASRock motherboard detected the APU and has worked solidly ever since. I did have to flash the board first with my own 2700X to get the firmware updated so that it would support the APU. Unfortunately despite being a high end motherboard, ASRock did not see fit to include a BIOS Flashback type tool where you can update firmware without any other components available. Having that would have saved me a good few hours to say the least.

In the three weeks since the build was finished, the system as a whole has run super well. Thanks to the advancement of technology, the chip has the same 4 cores / 8 threads as the previous Intel chip based PC had, but is so much more powerful it’s not even funny. Power consumption as well is more than halved – proof enough of this is feeling how much cooler the metal on the case above the power supply in the PC is – it’s cool to the touch compared to the heat generated with the old system. The chip itself has more than enough grunt for my dad’s office type workloads and has accelerated his day to day job thanks to the improved response of the system.

I doubt this is a chip that will ever get any form of retail love and attention going forward, as AMD have already announced the 5000 series APU’s that are based on the Zen 3 architecture. That being said, this is a competent little chip that is more than powerful enough for any office or light workloads you may need. Since the built in graphics is decently powerful, you also don’t need to include a PCIe graphics card, reducing heat and lowering power consumption. If you are looking to build something entry level, this chip comes highly recommended.