Archive

Coming Soon

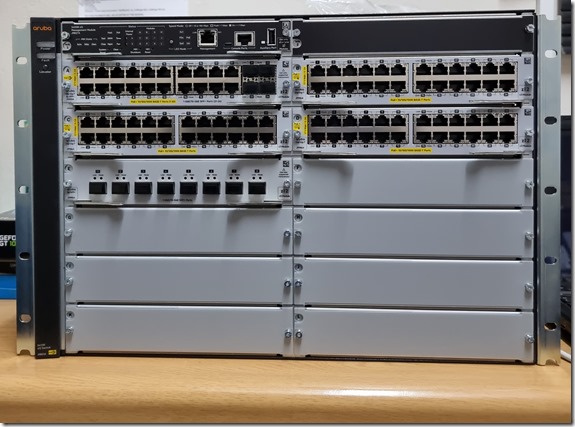

It took about 6 months to arrive thanks to the global chip shortage hammering every part of the electronics industry, but our new Aruba 5412R switch has finally arrived, to my immense joy. Behold our new core switch:

As I’ve written previously, this new switch is replacing 4 older switches in our network core. 3 of those switches will be retired, while one will move to another venue, replacing an older 2610 model. Once that happens, I will have no more switches that use Java in their web interface, which is a big step forward. Accessing Java-based management pages on a modern PC isn’t easy, and this goes a very long way towards removing Java from my work PC completely. Unfortunately, one server’s remote KVM still runs on Java, but I hope to replace that server next year with a new one that offers HTML5 remote access instead.

Back to the switch above. While it may look big and complex, its web interface is the same HPE/Aruba interface I’ve grown to love over the last 5 years. Everything is immediately familiar, including the command-line configuration, unlike the CX 6100 series switches, which use a new OS. Once the switch is installed during the upcoming holidays, not only will a large number of PCs jump up to a gigabit link, but most of my fibre backbone will also jump to 10Gb/s across basically the entire school. In future, it would be easy enough to swap one of the modules for another to support things like 2.5Gb/s links or QSFP connectors for 40Gb/s links. Thanks to the modular nature of this chassis, mixing and matching will be easy. A chassis system like this is something Ubiquiti is missing from its current UniFi line-up, which keeps their kit out of some locations. UniFi has its own strengths and weaknesses, but now that I’ve handled a chassis switch like this, I am convinced it’s a real gap in their range.

A little over 2 weeks from the time of writing, this big boy will go in and the biggest project I’ve ever undertaken at the school will essentially be complete. I still have some edge switches that need replacing, but those should be finished off next year. Eventually I will need to replace switches again to get 2.5Gb/s ports for the Wi-Fi APs, but that is further in the future.

A bonus benefit is that, being such a large machine, its fans will run much quieter than the 40mm screamers in 3 of the 4 switches being replaced. The server room will be a lot quieter after this big boy goes in!

A BIG network change is coming soon

When I first started at my current school in 2009, I arrived with a very basic knowledge of network switching. I knew the difference between hubs and switches, and I knew that some switches had a web interface you could use to configure the unit. My first job, however, only had unmanaged switches, and the college I studied at didn’t have managed switches for students to play with. Fast forward to 2009, and I walked into a school that had 2 x HP ProCurve 2524 units, 1 x HP ProCurve 2900-24G and unmanaged D-Link switches everywhere else.

When my first colleague started a week after I did, he introduced me to these ProCurve switches, explained why they were so good and told me that we would have to replace all the other switches in the school with ProCurves. At some point in early 2010, we managed to get a stack of ProCurve 2610 models to go around the school, replacing many, though not all, of the D-Link unmanaged units. Combined with new 6U cabinets and some patch and brush panels, this made the physical cabled network start to look a lot more respectable and far less haphazard.

As the years rolled on, I fully completed the swap from unmanaged to managed switches. One thing we did not anticipate at the time, however, was the need for PoE that came when we put in our first IP-based CCTV cameras. While we got a 2610-24 PWR model in the server room to centrally power some cameras, others had to be powered with PoE injectors, which led to messy cabinets that ran hot because the injectors sat in the base of each cabinet. Let me not even start on the mess of power cables, adapters and multiplugs this entailed.

When the CCTV system kept expanding, I realised that we were going to need to upgrade all the switches to PoE models. By then the 2620 series was out, so I started buying some of those, but due to the higher price I could never do the whole network at once. As a result, I was still buying piecemeal when the 2620 series was retired just after HPE bought Aruba and started integrating the product lines. I then purchased one 2530 unit, which seemed to be superseded pretty quickly by the 2540 line. Just a few days ago, I attempted to purchase yet another 2540 unit, only to be told that it was end of life and that the Aruba CX 6100 series was the replacement. Most of the network is now PoE capable, and at any given moment we are using about 500 watts of PoE power for CCTV, APs, phones and so on.

All the while that the edge network was being upgraded, the server room core has remained static with 1 x HP 2620, 1 x 2610-48, 1 x 2900-24G and 1 x 2610-24 PWR. This is far from ideal, as most of the fibre in the school has been upgraded to OM3 cable, giving us 10Gb/s capability. That is probably still more capacity than we’ll need for years to come, but that’s another story. For the last few years I’ve been mulling over the solution to bringing the core up to speed: a chassis-based switch. Not only would it give the core a massive speed upgrade, it would provide room for growth without requiring more rack space. Throw in potential redundancy, quieter operation thanks to bigger fans and the ability to hot-swap modules, and the attraction of these units couldn’t be more obvious.

After weighing the pros and cons and looking at the options Aruba offers, I settled on the Aruba 5412R zl2 v3 chassis switch. It is a 12-slot, 7U unit that can mix and match all sorts of modules, from 100Mb/s copper Ethernet to 40Gb/s fibre and everything in between. I found a bundle option that includes 92 gigabit PoE ports and 4 SFP+ ports. I had to add an extra 8-port SFP+ module to take care of our fibre, as well as 2 power supplies. It’s not a cheap purchase, but I opted for slight overkill and went with the 12-bay unit rather than the only other option, a 6-bay unit. The 6-bay version just didn’t have enough expansion room for my taste, and since this switch is going to have to last 15 or more years, that matters.

The biggest problem, however, was that due to the high cost of the unit, I couldn’t buy it in one go. Normally I’d put something this expensive in as a CAPEX request, but because the school built a new wing a few years ago, CAPEX was essentially non-existent while the school repaid the loan for that building work. I was forced to split the cost of the unit over 2 years. Unfortunately the delay played havoc with pricing as the R/$ exchange rate went south. I was despairing of ever getting the unit given the quotes I was receiving, but thankfully one supplier came to the party with outstanding pricing.

I’m not sure when I’ll actually take delivery of the package, thanks to the damned global chip shortage playing havoc with everything electronic and the order being placed so late in the year. It could be late January next year or even February before it arrives at the school, and I will really only be able to install it on a weekend, as we can’t take the network down for surgery during the day.

This is a stock picture of what the unit will look like, minus rack ears:

I will write another post once I’ve taken delivery of the device and installed it. Despite the flexibility of this chassis-based switch, it runs the same sort of OS as almost every other Aruba and HP switch before it. Aruba is now introducing new switches that run ArubaOS-CX, which is built on Linux rather than on ProVision, the custom OS of the older models. The CX 6100 model I’ve also ordered runs this OS, so I will see how it compares to the older ProVision-based models. The long and short of it is that commissioning this device, software and configuration wise, won’t take too long, even though it is replacing 4 switches. To me, that is a beautiful thing, and I know I am going to have a huge smile on my face once this device is up and running.

Thoughts on CCTV and Dahua

When I first arrived at my current job in February 2009, I had a little CCTV knowledge from my previous job. The previous school I worked at had installed 4 or so analogue CCTV cameras that fed into a capture card in a PC that handled recording, playback and so on. Resolution was low and quality was worse: because of the long runs of coaxial cable, the cameras often dropped from a colour picture to black and white. It was not a pretty setup, to say the least.

My new job seemed to have had some sort of CCTV at some point before I arrived, as there were a number of analogue cameras mounted on the front and side of our school, with a cabling nightmare that seemed to terminate in the security hut at the front gate. From the ramblings of our security guard, I believe there must have been one of those small industrial type monitors in the hut that they used to view the cameras. That being said, so far as I could tell, nothing was running anymore in 2009.

Sometime in either 2010 or early 2011, the business manager at our school got some people in to look at things, and they suggested going the IP-based route. Unfortunately we had to run a lot of the cable ourselves, as that cost hadn’t been budgeted for in the install. That was a painful stretch I hope never to repeat in my life! We also took ownership of our first PoE switch to power a chunk of these cameras from the server room, while the rest had to settle for PoE injectors in the various cabinets around the school. PoE switches were expensive and not something we could put in willy-nilly, especially as we had replaced many of the unmanaged D-Link switches with decent HP ProCurve 2610 units not long before this CCTV project started.

Nonetheless, the Axis cameras went in, Camera Station was loaded onto a server and we started recording the school. As time went on, extra cameras were added internally and externally until we ended up with about 33 cameras. The cameras paid for themselves by providing evidence in disciplinary hearings and so on, but there was a growing number of problems with our setup:

- Axis cameras were becoming more and more expensive to purchase or lease.

- Camera Station licenses were becoming stupidly expensive and were capped at 50.

- The resolution of most of our cameras was way too low for them to remain useful.

We hobbled along until 2017, when our principal got schmoozed by a salesman at a conference. This office automation/copier company had expanded into CCTV, and thanks to some persuasive talking by the salesman, our principal was determined to get them in to do an upgrade at our school. After the mandatory additional quotes were obtained, the pushy company got its 5-year contract. That is how I was introduced to Dahua.

I had heard the name in passing before this, when I read about their equipment being used in botnets to DDoS websites and services. I was apprehensive about this system from the start, and it turns out I was mostly right to be concerned. Dahua has also found itself on blacklists thanks to Donald Trump, but that is another story.

The pushy company ripped out and replaced our existing cameras, which went quickly enough, and then ran cable for all the extra cameras we had signed up for. I will say that the installing subcontractor did a very good job: the installs were neat and tidy, and we had no issues with his workers interfering with our kids. I found the initial Dahua cameras to be a big upgrade in resolution over the old Axis cameras, and the build quality was also pretty decent. The management software side of things, however, was and remains horrible.

Smart PSS is a horrible piece of software for managing your system, exporting recordings and so on. It gets the job done but is buggy as hell, and compared to Axis Camera Station I found it severely lacking. Nonetheless, we got by. When our school built a new wing, the company was called in to expand the system and another 10 or so cameras were installed. At that point we had filled all 64 channels on the NVR. With a remodelling of our sports pavilion and tuckshop in 2019, yet more cameras were added to the system, necessitating an upgrade to a 128-channel NVR.

Since then the system has continued to run, but we have had some minor issues. A couple of external cameras developed faults after problems with their weather sealing and have since been replaced. A bigger issue that has cropped up, however, is the exact camera models we have.

When the original Dahua units went in, we were given units that had already reached end-of-life status and were most definitely not current models in 2017. My guess is that the company had a lot of this stock sitting around that it couldn’t move, so it made up specials to dump it on unsuspecting schools. One camera model hasn’t had a firmware update since November 2015, another since sometime in 2017. These 2 models make up the bulk of our install, so we are left with unpatched cameras from a company with a known piss-poor security track record. Dahua’s answer to the problem mostly seemed to be “buy a newer camera model”, although that seems to be slowly improving these days.

I am now looking at doing ad-hoc replacements of these older cameras from next year to at least try to maintain the security of my network. Thankfully, since all the infrastructure is already in place, it is basically a straight swap and configure. I am also looking at implementing Dahua’s DSS Express software, which seems to be where they are focussing their attention going forward, rather than the horrid Smart PSS. In the next 2 weeks, I will also look at upgrading the firmware on the NVR, as the current firmware is close to 5 years old.

Without making this post any longer, let me just say that I have learned some new things that I will talk about shortly in a follow-up post.

Rest in Peace ZoneDirector 1200

Above this writing is a generic image of a Ruckus ZoneDirector 1200 wireless LAN controller. Ruckus Networks used to market multiple ZD models to meet the needs of networks of various sizes, but one by one they were all retired, leaving the ZD 1200 as the only remaining model. When version 10 of the controller software arrived, it raised the maximum number of access points the controller could manage to 150, which may explain why the other models were retired. Inside the chassis is a small single-board PC with a 4GB CompactFlash card, a 2GB DDR3 SODIMM and, I am guessing, some sort of ARM CPU under a cube-shaped metal heatsink that attaches to the top lid of the chassis.

We’ve had a ZD 1200 since our wireless network was installed at our school at the end of 2015. It’s seen multiple firmware/OS upgrades, a downgrade due to bugs in the 10.4 branch and lots of powering on and off due to our country’s less than reliable electricity generation. For the first 2 and a bit years of its life with us, the ZD had a very quiet time, as we didn’t yet have a fast enough internet connection to make use of the Wi-Fi network. Eventually we got a better connection, and Wi-Fi access was slowly ramped up after we managed to sort out VLANs and 802.1X authentication as well. From then on, the ZD had a busy life.

On Wednesday 17 February 2021, I left and went home for the day. Sometime between 16:00 on Wednesday afternoon and 08:00 on Thursday morning, the unit suffered a catastrophic and fatal hardware failure. Wi-Fi was not working when I got in on Thursday morning, and when I investigated, the unit was neither responding to pings nor visible on the network. I hard power cycled the device, only to be greeted by a constant high-pitched squeal. I power cycled it again. The squeal was gone, but now the device was utterly frozen.

I connected a console cable to the console port, set the correct serial settings in PuTTY and tried power cycling and connecting. Nada. The Status and Power LEDs, as well as the network LEDs on port 1, were all solid green. Moving the network cable to port 2 extinguished the LEDs on port 1, but port 2 then showed the same solid green behaviour.

Of course, this happened at the worst possible time for us as a school, as it was the first week back for the students in the 2021 school year. We had just started using Teams seriously and getting kids connected to the Wi-Fi so they could work, only to be greeted by this failure, which has thrown an ugly spanner in the works.

Anyway, I took the unit out of the rack and opened it up to have a look-see at what was happening inside. I could see no blown capacitors, scorch marks, burned odour or any other telltale sign of hardware failure. Whatever the issue was, it was bad enough that even removing the CompactFlash card and RAM and then booting made no difference: still solid green everything. Normally if you boot a computer without RAM it will beep, but this did nothing, so whatever the fault is, it’s seriously low-level.

Reading a bit on Ruckus’ forums, it seems other people have suffered the same issue and there is no known fix. If the device is under warranty, opening an RMA claim seems to be the only course of action. My theory is that the CPU dies due to poor design or electromigration, though that’s just a theory. I lack the tools and skills to do low-level diagnostics on something like this.

That being said, I’ve chosen a different route for the fix. We are going to purchase the virtual SmartZone network controller from Ruckus, which is essentially a Linux-based VM that will do the same job as the hardware controller did. SmartZone actually does more than ZoneDirector and can manage Ruckus network switches as well, which is of no use to us as we use Aruba/HPE switches. Based on what I can see, this really appears to be the direction Ruckus is heading anyway. There have been no new ZD units released in years to replace the older models. It seems Ruckus is following an industry trend towards software-defined networking and virtual controllers rather than hardware appliances. This makes sense in most cases, as a VM-based solution lets you easily add RAM, CPU cores, extra network interfaces, longer-term storage and so on. That’s not something you can do with a hardware controller…

Hopefully I’ll have the vSZ in place this week, ready to go once we’ve paid for it and the licenses have been issued to us. Licensing is another utterly annoying aspect of Ruckus equipment, but that is a story for another time.

RIP ZD 1200, 2015 – 2021. You were a good piece of kit and did an admirable job these last few years. Go now and rest with your brethren in the great hardware heaven in the sky.

Buggy Ruckus firmware

We’ve had Ruckus Wireless equipment in our school for almost a full 5 years now. 1 ZoneDirector 1200, 21 R500 and 9 R510 units power the Wi-Fi in our school. From day 1, I have never had any hardware issues with the equipment and software wise, the system has largely just worked. I’ve updated the firmware on the ZoneDirector a number of times and it’s always been smooth sailing, until the 10.4.0.70 update I applied a few months back.

All of a sudden, the access points were rebooting randomly with no discernible pattern. Whether it was 2AM or the middle of the day, the APs would spontaneously reboot, taking the whole wireless network down with them. The ZoneDirector itself, however, remained functional throughout.

After doing what little debugging I could on my own, I opened a support ticket with Ruckus. My users were not happy, as the random nature of the reboots meant they could strike at any time. I also had one user complaining vociferously about poor signal; despite my assurances that nothing had changed, she remained convinced that the signal was weaker after the update. This was very much a case of correlation and causation getting mixed up.

My assigned engineer first asked me for logs, then had me downgrade from 10.4.0.96 to 10.4.0.70, which didn’t help much with the reboots. After that I had to verify my network layout, for no reason I could understand. Lastly, I provided SSH and web access to the ZoneDirector, as well as SSH access to 2 access points, one powered by a PoE switch and one by an injector. The engineer pulled whatever he needed, but then went quiet for a while with no promised update.

At this point the reboots were untenable, so I informed him I would be downgrading back to the 10.2 firmware branch. I performed the downgrade on a Friday afternoon; it took about 10 minutes and thankfully kept all my settings. I was worried about having to set the controller up again from scratch or needing to restore a backup of the config file. The APs pulled in the downgraded firmware and rebooted themselves.

More than 3 weeks later, the wireless network is behaving itself 100%, with the kind of Ruckus stability I have grown to love. After I asked my engineer to close the ticket as the problem was resolved, he came back to tell me that Ruckus was acknowledging a bug in the 10.4 branch, as other customers had reported the same issue. Their developers would be working to get it resolved and patched in a future release.

I suppose this just goes to show that no vendor has a perfect firmware record; there is always room for bugs. I could have ended our users’ misery by downgrading a lot sooner than I did, but having opened a ticket, I wanted to hold off as long as possible in the hope of a positive result. Failing that, I wanted to give Ruckus enough information to produce a fix that would benefit all their customers, not just us.

Still, this is the first time I can recall firmware having such a visible impact on a service offered on the network. The only other incident I can even remotely think of is when I updated the firmware on one of our servers years ago, only to have the system fans spin up to full blast. It turned out that they hadn’t been plugged into the correct fan headers, and the update did not otherwise stop the server from running normally. No other firmware update I’ve ever performed has had an impact like this one from Ruckus. I am just glad that the issue is resolved, and in future I will perhaps wait a bit longer before I semi-rush into deploying firmware updates.

Huawei B525 mini review

For the last couple of years, my home internet connection has been powered by a Telkom LTE connection. After many years of ADSL going absolutely nowhere, we needed more speed and a cost reduction. Telkom was aggressively courting users with their LTE bundles, so we dropped ADSL and went the LTE route instead. Telkom supplied a Huawei B315 LTE router as part of the bundle and the device pretty much did its job without much fuss over the years.

Last year the contract came up for renewal, and after a few months of running month to month past its expiry, we decided to renew. Fibre is not available in our area at the moment, and the new LTE offer was cheaper than before. As part of the new contract, Telkom supplied a Huawei B525 unit. I tried telling the sales rep that we didn’t need a new device as the B315 still worked fine, but in the end we had no option but to take it.

Until yesterday, the B525 sat in its box unused. However, increasingly sluggish LTE speeds on the B315 made me decide to try the new unit and see if performance would improve. The swap-over was pretty straightforward, as the units are very alike. The biggest differences are that the B525 doesn’t come with external LTE antennae in the box, nor does it come with a battery backup unit. Apart from that, it looks like a larger version of the B315.

Internally, the B525 offers 802.11ac wireless, which is a very welcome bump over the B315’s 802.11n maximum, primarily because AC runs in the far less crowded 5GHz band. I can’t comment on the performance of the Ethernet ports or the media sharing capabilities of the device, as only 1 PC is connected via Ethernet and I’ve never used the USB port on the device.

Reconnecting all our devices to the new router wasn’t hard and before long everything was up and running. The web management interface is essentially identical to the B315, with only some very minor tweaks and items moved around in the B525 interface.

Wi-Fi performance seems nice and solid so far, and having devices segregated by frequency helps keep things balanced. LTE performance does appear to be a bit better than the B315’s, but it’s still not where it was when we first started using LTE at home. I don’t know if the ongoing national lockdown is putting more strain on Telkom’s cellular network or if they are just capacity-starved these days, but it is definitely not as fast and snappy as it used to be, as multiple speed tests bear out.

For the average home user, the B525 does the job well enough. It has enough features to stay relevant and seems to be pretty stable. For enterprise or advanced home use, the device will fall short, as it lacks features like bridge mode and finer-grained control over its features. Firmware updates also appear to be pretty much non-existent, which is never a good thing when new vulnerabilities appear every other day.

The long road to Fibre is finally completed

Around July 2016, our school applied for a 100Mb/s fibre optic internet connection. We couldn’t cope on our tired, speed-deprived ADSL any longer, and briefly opening up our Wi-Fi to staff immediately killed all bandwidth to the desktop computers. While the cost would go up quite a bit, the benefits were deemed to outweigh the cost concerns.

After the contract was signed, the wait began for the fibre infrastructure to be built and brought into the school. Around November 2016, a site survey was completed and the build route laid out. Plans were formally drawn up and submitted and we hoped that construction would be starting late January or February 2017, so that we could go live before the end of the first term 2017. Sadly, this never happened.

What did happen was a long descent into utter frustration as we played the waiting game. The contractors building the link, Dark Fibre Africa, seemed to hit every brick wall and bureaucratic pitfall possible. One delay after another saw the months slip away like sands through the hourglass (so are the Days of Our Lives).

The explanation, however, stayed largely the same: the real hold-up was the City of Cape Town. Eventually it got to a point where I had to call in the ISP to meet with the principal of our school, as he wanted answers. The ISP and an account manager from DFA came in and placated the principal somewhat, but they couldn’t tell him anything I hadn’t already told him. This was frustrating because, in the end, it reflected poorly on my annual performance appraisal.

It actually got to the point where, in November 2018, we gave notice to cancel the contract, as no construction had started and we were tired of waiting. During the waiting period, we’d contracted a wireless ISP, who helped us a lot, but by the end of our time with them we were suffering majorly degraded speeds, not to mention that the bandwidth wasn’t guaranteed and was shared with a neighbouring primary school.

I’m not sure whether it was the threat to cancel or whether the ducks were all lined up in a row by that time, but the ISP pleaded with us not to cancel as DFA was ready to begin the build. We were sceptical at the school, but decided to give it one last shot at redemption. From experience, we knew that applying for fibre with a new ISP would start the cycle of wayleave issues and permitting problems all over again, whereas the existing ISP had already fought through all of that.

Lo and behold, construction began in late November 2018 and moved at a rapid pace. I was completely surprised at how fast DFA and its subcontractors were able to move: before the school closed on 15 December, all the construction work, from the trenching and ducting to the manholes and the rest, was complete. Now it was just a case of blowing the fibre down the tubes and splicing us into the network in the new year.

Sure enough, during January this year the remainder of the work was completed. One team of technicians came to blow the fibre, and another came to terminate it into a splice tray. Finally, DFA came with their layer 2 media converter/CPE unit and installed it. The device essentially converts fibre to Ethernet, but I assume it also does some sort of management on the line. Configuration details were sent to me, which didn’t work initially. Interestingly, the connection was completely IP-based, with no dial-up or router needed: just set your switch or firewall to the same VLAN as the connection, then configure your IP address and default gateway.

A day of troubleshooting later, it turned out that I had been given the wrong address for the default gateway; it had been transposed with the network address. A simple enough mistake, and once the correct value was punched into my firewalls, internet flowed. Come Monday 28 January, I started switching traffic over to the fibre, and by the end of the day everything was running over fibre. Solid, stable and fast, the connection has not had an issue since.
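A transposed gateway like this can be caught before going live by checking that the gateway is a usable host inside the interface’s subnet. Below is a minimal sketch using Python’s standard `ipaddress` module. The function name `check_static_config` is hypothetical, the addresses come from the 192.0.2.0/24 documentation range rather than any real allocation, and treating /31 and /32 subnets as having no reserved network or broadcast address is an assumption based on RFC 3021.

```python
import ipaddress


def check_static_config(address: str, gateway: str) -> list[str]:
    """Return a list of problems with a static IPv4 config (empty list = looks sane).

    address: interface address in CIDR form, e.g. "192.0.2.2/29"
    gateway: default gateway as a bare address, e.g. "192.0.2.1"
    """
    iface = ipaddress.ip_interface(address)
    net = iface.network
    gw = ipaddress.ip_address(gateway)
    problems = []

    # Assumption: /31 (RFC 3021) and /32 subnets have no reserved network/broadcast address.
    has_reserved = net.prefixlen < 31

    if gw not in net:
        problems.append(f"gateway {gw} is outside {net}")
    elif has_reserved and gw == net.network_address:
        problems.append(f"gateway {gw} is the network address of {net}")
    elif has_reserved and gw == net.broadcast_address:
        problems.append(f"gateway {gw} is the broadcast address of {net}")

    if has_reserved and iface.ip in (net.network_address, net.broadcast_address):
        problems.append(f"interface address {iface.ip} is not a usable host in {net}")
    if iface.ip == gw:
        problems.append("interface address and gateway are identical")
    return problems


# A gateway swapped with the network address is flagged; a correct one is not.
print(check_static_config("192.0.2.2/29", "192.0.2.0"))
print(check_static_config("192.0.2.2/29", "192.0.2.1"))
```

An empty list only means nothing is obviously wrong with the numbers; it says nothing about whether the gateway actually answers on the VLAN.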

The wait was long and 100Mb/s is no longer quite as speedy as it once seemed, but I now have an incredibly stable connection that I can rely on. No interference in the Wi-Fi spectrum, no Telkom exchange issues with ADSL or wet copper lines, just bliss and peace of mind really. It was worth the wait in the end, and best of all, boosting the speed of the connection just takes an email to the ISP. No other install, no new equipment, just a quick mail or phone call to make the change. Internet life at work is now bliss!

MDT with no working USB system

MDT uses Windows PE to boot a PC and perform the first stages of an install. The Windows PE image is built from the Windows ADK, the latest edition of which supports Windows 10 1803. As such, you would expect pretty stellar out-of-the-box support for well-established, older hardware, but this isn’t always the case.

Recently I was working with some 8-year-old PCs that have an Intel P35 chipset in them, using the ICH9 southbridge. The MSI Neo P35 boards had 1 BIOS update before MSI promptly forgot about them. The USB options in the BIOS are limited to turning the controller on and off and enabling or disabling legacy support, and that’s it.

When booting the PC, the keyboard and mouse worked fine in the BIOS as well as on the option ROM screens used to select network boot, press F12 to enter MDT and so on. The problem was that as soon as I got into MDT, the keyboard and mouse vanished. Unplugging and re-plugging the devices didn’t help, nor did switching ports. Unfortunately I no longer have any PS/2 mice or keyboards, so I was stuck and unable to continue the MDT task sequence. Disabling USB legacy support simply meant that the keyboard stopped working on the option ROM and network boot screens, so I couldn’t even enter MDT in the first place.

There wasn’t a huge amount of info on the net, but the problem seemed to be related to the drivers in use for the USB controller. I tried deleting the driver in MDT and rebuilding the boot image, but my first attempt had no luck: the keyboard and mouse still vanished once in MDT. I then built a “clean” boot image with no additional drivers in it, bar what is built into Windows PE, and this time I had the mouse and keyboard working right away. Yay, problem solved, I thought.

Wrong. Once Windows was installed, my mouse and keyboard refused to work and I was unable to interact with Windows at all. Changing keyboards and ports did nothing. Eventually I used Remote Desktop to connect to the PC and looked at Device Manager. Sure enough, there were yellow bangs on most of the USB controller entries, so the ICH9 USB controllers weren’t fully installed by the drivers built into Windows 10.

I found a zip on Intel’s site for their chipset/INF installer, dating from the early 2010s, but the actual INF driver for the ICH9 USB controller inside it dates from 2008. These installed perfectly on Windows 10 and enabled me to use the PC.

My next attempt saw me take these INF files and add them to MDT’s driver pool, then build new boot images using these drivers. Lo and behold, not only did Windows PE behave on boot, the finished Windows 10 install worked correctly as well.

What puzzles me is that on my first attempt I already had ICH9 drivers in the boot image, but these dated from 2013. There must have been some sort of bug in those drivers when used in Windows PE, so I simply reverted to the older 2008 ICH9 USB drivers. It’s only 2 files in total that need to be injected.
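The fix came down to which version of the ICH9 USB driver ended up in the boot image. For that reason, it can help to check the `DriverVer` date of every INF before adding it to MDT’s driver pool. Here is a minimal Python sketch, assuming the drivers have already been extracted to a folder. It reads the standard `DriverVer = mm/dd/yyyy,version` line from each INF’s `[Version]` section. The folder name and the sample version number in the test are made up, not taken from Intel’s package.

```python
from datetime import date
from pathlib import Path


def load_inf(path: Path) -> str:
    """Read an INF file, which may be UTF-16/UTF-8 with a BOM or plain ANSI text."""
    data = path.read_bytes()
    if data.startswith((b"\xff\xfe", b"\xfe\xff")):
        return data.decode("utf-16")  # the codec consumes the BOM
    if data.startswith(b"\xef\xbb\xbf"):
        return data[3:].decode("utf-8")
    return data.decode("latin-1")


def driver_ver(inf_text: str) -> tuple:
    """Return (date, version) from the DriverVer line in the [Version] section."""
    in_version = False
    for raw in inf_text.splitlines():
        line = raw.split(";", 1)[0].strip()  # ';' starts a comment in INF files
        if not line:
            continue
        if line.startswith("["):
            in_version = line.lower() == "[version]"
            continue
        key, _, value = line.partition("=")
        if in_version and key.strip().lower() == "driverver":
            date_part, _, version = value.partition(",")
            month, day, year = (int(p) for p in date_part.strip().split("/"))
            return date(year, month, day), version.strip()
    raise ValueError("no DriverVer found in [Version] section")


def list_driver_versions(folder: str) -> list:
    """Return (file name, date, version) for every *.inf under folder, oldest first."""
    rows = []
    for inf in Path(folder).rglob("*.inf"):
        when, version = driver_ver(load_inf(inf))
        rows.append((inf.name, when, version))
    return sorted(rows, key=lambda row: row[1])


# Hypothetical usage against an extracted driver folder:
# for name, when, version in list_driver_versions(r"C:\Drivers\ICH9"):
#     print(name, when, version)
```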

In the end, older hardware is going to have odd little quirks like this that eat up time and cause puzzlement while you try to figure out what the problem is. Of course, newer hardware isn’t exempt either: Intel’s I219-V network controller has a bug that prevents PXE booting, and not all motherboard manufacturers released UEFI updates to fix it, probably because they didn’t deem it worth their time.

Going down the rabbit hole of 802.11r

If there’s one thing that will get under my skin and irritate me to no end, it’s a problem that I can’t figure out or fix, yet it seems I should be able to. Case in point is a situation I was in recently at work. Ever since implementing 802.1x authentication on our Wi-Fi this year, staff and students have been able to sign into the Wi-Fi without needing to know a network key, log into a captive portal or use any other method of connecting. WPA Enterprise was made for exactly that sort of situation, even though it does take quite a bit of work to set up.

One staff member however could not connect to Wi-Fi no matter what we tried. We knew it wasn’t a username and password issue, but his Samsung Galaxy J1 Ace simply refused to connect. After entering credentials, the phone would simply sit on the Wi-Fi screen saying “Secured, Saved.” For the record, the J1 Ace is not that old a phone, but it came with Android 4.4.4 and has never been updated here in South Africa. Security updates also ceased quite a while ago. There is a ROM that I’ve seen out there for Android 5, but since I didn’t own the phone I didn’t want to take any chances with flashing firmware via Odin and mucking about with something that clearly was never supported here locally.

My first thought about the connectivity issue was that the phone had a buggy 802.1x implementation and couldn’t handle PEAP or MSCHAPv2 properly. Connecting the phone to another SSID that didn’t use 802.1x worked fine, which indicated that the hardware was working at least. I eventually gave up and told the staff member that the phone was just too old to connect. He accepted this with good grace, thankfully, but it gnawed away at me, and I kept wondering what the issue was.

A few weeks ago, a student came to me wanting to connect to the Wi-Fi. Lo and behold, she had the exact same phone, and we had the same situation as before. This time, though, I got frustrated and was determined to find out what the problem was. Most of my internet searches came back with useless info, but somewhere, somehow I came across an article about how Fast BSS Transition had issues with certain phones and hardware. Fast BSS Transition is defined in the IEEE 802.11r amendment and, in a nutshell, it helps devices roam better on a network. This matters especially in a corporate environment where you might be using a VOIP app that is sensitive to latency and delays while the device roams between access points. It had been a standardised add-on to Wi-Fi for a good few years, so a device from 2015 should have been just fine supporting it!
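Enabling 802.11r changes what the access point advertises. With Fast BSS Transition switched on for a WPA Enterprise SSID, an AP typically lists the FT-over-802.1X key management suite (00-0F-AC:3) alongside plain 802.1X (00-0F-AC:1) in the RSN element of its beacons. A client with a buggy supplicant may choke on that extra suite rather than simply ignoring it. That is one plausible mechanism for the J1 Ace’s behaviour, but it was not confirmed here. The Python sketch below decodes the AKM list from raw RSN element bytes, such as those you might copy out of a packet capture. The example bytes are built by hand, not captured from our Ruckus network.

```python
import struct

# AKM suite types defined under the IEEE 802.11 OUI 00-0F-AC.
IEEE_OUI = b"\x00\x0f\xac"
AKM_NAMES = {
    1: "802.1X",
    2: "PSK",
    3: "FT over 802.1X (802.11r)",
    4: "FT using PSK (802.11r)",
}


def rsn_akms(element: bytes) -> list:
    """Return the AKM suites listed in an RSN element (element ID 48)."""
    if len(element) < 2 or element[0] != 48:
        raise ValueError("not an RSN element")
    body = element[2:2 + element[1]]
    pos = 2 + 4  # skip version (2 bytes) and group data cipher suite (4 bytes)
    (pairwise_count,) = struct.unpack_from("<H", body, pos)
    pos += 2 + 4 * pairwise_count  # skip the pairwise cipher suite list
    (akm_count,) = struct.unpack_from("<H", body, pos)
    pos += 2
    akms = []
    for _ in range(akm_count):
        oui, suite_type = body[pos:pos + 3], body[pos + 3]
        pos += 4
        if oui == IEEE_OUI:
            akms.append(AKM_NAMES.get(suite_type, f"00-0F-AC:{suite_type}"))
        else:
            akms.append(f"vendor {oui.hex()}:{suite_type}")
    return akms


def advertises_fast_transition(element: bytes) -> bool:
    """True if any advertised AKM is one of the 802.11r FT suites."""
    return any("802.11r" in name for name in rsn_akms(element))


# Hand-built example: CCMP only, AKMs 802.1X + FT over 802.1X.
example = bytes.fromhex(
    "3018"                  # element ID 48, length 24
    "0100"                  # RSN version 1
    "000fac04"              # group cipher: CCMP
    "0100000fac04"          # 1 pairwise cipher: CCMP
    "0200000fac01000fac03"  # 2 AKMs: 802.1X, FT over 802.1X
    "0000"                  # RSN capabilities
)
print(rsn_akms(example))  # ['802.1X', 'FT over 802.1X (802.11r)']
```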

Borrowing the staff member’s J1 Ace, I disabled the 802.11r and 802.11k options on the network. His phone connected faster than a speeding bullet it seemed! That adrenaline rush was quite pleasant, as I now finally knew what the issue was. I enabled 802.11k and the phone still behaved, which meant that the culprit was 802.11r. The moment that was enabled, the phone dropped off the network.

My solution to this problem was to clone the network in our Ruckus ZoneDirector, hide the SSID so that it isn’t immediately visible and disable 802.11r for this specific SSID. Once that was done, the teacher connected and has been incredibly happy that he too can now enjoy the Wi-Fi.

My theory is that some combination of wireless chip, chip drivers, Android version and potentially the KRACK fix on our network caused the J1 Ace to be unable to connect. It could be that, while the phone does support 802.11r, it was last patched before KRACK was revealed and doesn’t know how to handle the wireless frames on our network now that the KRACK fix is in place. Since the phone is never going to get any more updates, the only answer is to run a network without 802.11r for these kinds of devices.

It makes me angry that this kind of thing happens with older devices. It is also related to Android itself, but that is a topic for another post entirely.

The road to DMARC’s p=reject

DMARC is sadly one of the more underused tools on the internet right now. Built on top of the DKIM and SPF standards, DMARC can go a very long way towards stopping phishing emails stone cold dead. SPF tells receiving servers whether mail has been sent from a server you control or have authorised, and DKIM signs the email using keys only you should have. DMARC then tells servers what to do when a mail fails both checks, or passes them only for a domain that doesn’t match the visible From address. Mail can be let through, quarantined to the Spam/Junk folder or rejected outright by the recipient server.
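The DMARC pass/fail rule is more forgiving than it might first appear. A message passes if SPF or DKIM passes and the domain that check authenticated lines up (“aligns”) with the domain in the From header. Below is a minimal Python sketch of that rule, assuming relaxed alignment by default and a single DKIM signature. The small suffix set is a hypothetical stand-in for the Public Suffix List that real receivers use, and the domains are placeholders.

```python
# ASSUMPTION: a tiny stand-in for the Public Suffix List, which real receivers
# use to find the "organisational domain" for relaxed alignment.
MULTI_LABEL_SUFFIXES = {"co.za", "org.za", "ac.za", "co.uk", "org.uk"}


def org_domain(domain: str) -> str:
    """Approximate organisational domain: the registrable name under its suffix."""
    labels = domain.lower().rstrip(".").split(".")
    if len(labels) >= 3 and ".".join(labels[-2:]) in MULTI_LABEL_SUFFIXES:
        return ".".join(labels[-3:])
    return ".".join(labels[-2:])


def aligned(auth_domain: str, from_domain: str, strict: bool) -> bool:
    """Strict alignment needs an exact match; relaxed needs the same org domain."""
    if strict:
        return auth_domain.lower().rstrip(".") == from_domain.lower().rstrip(".")
    return org_domain(auth_domain) == org_domain(from_domain)


def dmarc_pass(from_domain: str,
               spf_pass: bool, spf_domain: str,
               dkim_pass: bool, dkim_domain: str,
               aspf: str = "r", adkim: str = "r") -> bool:
    """DMARC passes if SPF or DKIM passes AND is aligned with the From domain."""
    spf_ok = spf_pass and aligned(spf_domain, from_domain, aspf == "s")
    dkim_ok = dkim_pass and aligned(dkim_domain, from_domain, adkim == "s")
    return spf_ok or dkim_ok


# Forwarded mail whose envelope sender was rewritten: SPF passes for the
# forwarder's domain only, but the DKIM signature survives, so DMARC passes.
print(dmarc_pass("school.example", True, "forwarder.example", True, "school.example"))  # True
# Spoofed From: SPF and DKIM pass, but only for the spammer's own domain.
print(dmarc_pass("school.example", True, "spammer.example", True, "spammer.example"))  # False
```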

Since moving our school’s email over to Office 365 a year ago, I have had a DMARC record in place, set to p=none so that I could monitor the results over time. I use a free account at DMARC Analyzer to check the results and have been keeping an eye on things over the last year. Confident that all mail flow from our domain is now working properly, I recently modified our DMARC record to read “p=reject;pct=5”. Now, destination servers that check DMARC on mail claiming to come from our domain will reject 5% of the messages that fail the SPF and DKIM checks. 5% is a good, low starting point, since according to DMARC Analyzer, none of our mail has completely failed DKIM or SPF checks in a long time. Some mail is being modified by some servers, which does alter the alignment of the headers somewhat, but overall it still passes SPF and DKIM checks.
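For a staged rollout, it helps to know how receivers treat `pct`. RFC 7489 (section 6.6.4) says a receiver should apply p=reject to at most `pct` percent of failing messages and should treat the rest as if the policy were quarantine. At pct=5, most failing mail would therefore land in Spam/Junk rather than bouncing. The Python sketch below parses a record and simulates that sampling. It models the RFC’s rules and is not how any particular mail provider implements them. The full record is assumed to start with `v=DMARC1`, as real DMARC records must.

```python
import random


def parse_dmarc(record: str) -> dict:
    """Split a DMARC TXT record such as 'v=DMARC1; p=reject; pct=5' into tags."""
    tags = {}
    for part in record.split(";"):
        key, sep, value = part.partition("=")
        if sep:
            tags[key.strip().lower()] = value.strip()
    if tags.get("v") != "DMARC1":
        raise ValueError("not a DMARC1 record")
    return tags


def disposition(tags: dict, rng: random.Random) -> str:
    """Model the policy a receiver applies to one message that failed DMARC."""
    policy = tags.get("p", "none")
    pct = int(tags.get("pct", "100"))
    if policy == "none" or rng.randrange(100) < pct:
        return policy  # message selected by the pct sample
    # RFC 7489 6.6.4: unsampled p=reject mail is treated as quarantine;
    # unsampled p=quarantine mail gets normal local filtering ("none" here).
    return "quarantine" if policy == "reject" else "none"


if __name__ == "__main__":
    tags = parse_dmarc("v=DMARC1; p=reject; pct=5")
    rng = random.Random(2024)
    outcomes = [disposition(tags, rng) for _ in range(10_000)]
    print({p: outcomes.count(p) for p in ("reject", "quarantine")})
```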

My next goal is to ramp up that 5% to 20% or 30%, before finally removing the pct variable completely and simply leaving the policy as p=reject. Not only will I be stopping any potential phishing incident arising from our school’s domain, I am also being a good net citizen in the fight against spammers.
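That ramp-up plan translates into a short series of TXT record values published at `_dmarc.<domain>`. Here is a small Python helper that builds them. The reporting address is a hypothetical placeholder, since the real DMARC Analyzer `rua` address isn’t given here. The `pct` tag is dropped at 100 because 100 is the default.

```python
from typing import Optional


def dmarc_record(policy: str, pct: int = 100, rua: Optional[str] = None) -> str:
    """Build a DMARC TXT record value for the _dmarc.<domain> name."""
    if policy not in ("none", "quarantine", "reject"):
        raise ValueError(f"unknown policy {policy!r}")
    if not 0 <= pct <= 100:
        raise ValueError("pct must be between 0 and 100")
    tags = ["v=DMARC1", f"p={policy}"]
    if pct != 100:  # 100 is the default, so the tag can simply be removed
        tags.append(f"pct={pct}")
    if rua:
        tags.append(f"rua={rua}")
    return "; ".join(tags)


# Hypothetical ramp: 5% -> 20% -> 30% -> full enforcement.
for stage in (5, 20, 30, 100):
    print(dmarc_record("reject", stage, rua="mailto:dmarc@example.org"))
# v=DMARC1; p=reject; pct=5; rua=mailto:dmarc@example.org
# v=DMARC1; p=reject; pct=20; rua=mailto:dmarc@example.org
# v=DMARC1; p=reject; pct=30; rua=mailto:dmarc@example.org
# v=DMARC1; p=reject; rua=mailto:dmarc@example.org
```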

Of course, this doesn’t help if a PC on my network gets infected and starts sending mail out via Office 365, as that mail will pass SPF and DKIM checks and will have to be caught by the normal methods such as Bayesian filtering, anti-malware scans etc. That is the downside of SPF, DKIM and DMARC: they can’t prevent spam from being sent from inside a domain, so domains still need to be vigilant for malware infections, bots etc. At least with the policies in place, one avenue for spammers gets shut down. As more and more domains come on board, spammers will continue to get squeezed, which is always a good thing.