Archive

Updating the firmware on Ricoh MFDs

In the not so old days, getting firmware updated on your multi-function devices, a.k.a. the photocopier, required booking a service call with the company you leased the machine from, then having a technician come out with an SD card or USB stick to update the firmware. It was one of those things that just wasn’t done unless necessary, as by and large the machines just worked. Issues were almost always mechanical in nature rather than software related, so the need to update firmware wasn’t a major issue per se.

That being said, modern MFDs are a lot more complex than machines from a few years ago. Now equipped with touch screens, running apps on the machine to control and audit usage, scanning to document or commercial clouds and more, the complexity has increased dramatically. Increased complexity means more software, which in turn requires more third party code and libraries. If just one of the links in that chain has a vulnerability, you have a problem. Welcome to the same issue the PC world has faced since time immemorial.

I can’t speak to what other manufacturers are doing, but I discovered that Ricoh bit the bullet a few years ago and made a tool available to end users so that they can update the firmware on their MFDs themselves, without requiring a support technician to come in and do it. This saves time and money and also helps customers protect themselves, since they can upgrade firmware immediately rather than waiting for the tech to arrive. Some security threats are so serious that they need urgent patching, and the best way to get that done is to make the tools and firmware available.

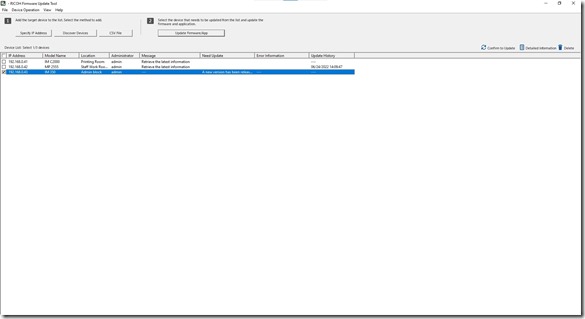

Enter the Ricoh Firmware Update Tool:

The tool is quite simple to use, though it is Windows only at this point in time. Either enter your MFDs by hand, or let the tool scan your network for compatible devices. Ricoh publishes a listing of supported devices, which is a rather nice long list. I would imagine that going forward, all new MFDs and networked printers released by Ricoh will end up being supported as well. I can understand that older machines won’t be on that list as they weren’t designed with that sort of thing in mind.

Once the device(s) have been discovered, you let the tool download info from Ricoh to determine what the latest updates are. Under the Need Update column, the tool will tell you whether an update is required or the machine is already on the latest version.

Once you are ready, you click on the Update Firmware/App button, which brings up the next screen:

You can click on a device and click Detailed Information to see more info about the copier, including the existing versions. Clicking on Administrator Settings lets you choose whether to just download the firmware into a temp folder, download and install in one go, or install from a temp folder, perhaps one downloaded on another PC. Once you’ve made your choice there, clicking the Execute button will start the update process. The tool offers solid feedback at every stage along the way. The firmware is downloaded and pushed to the machine(s), which then proceed to digest and install it. There are a number of steps that happen on the devices themselves and it can take between 30 minutes and an hour per machine to complete.

Once completed, you can see the results by clicking on a device in the main window and selecting Detailed Information. This will bring up this final window which contains a change log of what was updated:

As you can see above for our MP 2555 device, the update was pretty substantial. This makes sense as we’ve had this copier for almost 5 years now and it’s never been updated before this. 3 out of the 4 devices on the network needed to be updated, which has since been completed. The process was painless, if time consuming – there are a lot of different parts of an MFD that need attention, so it’s understandable in the end. MFDs have become a bigger security issue over the years, and making firmware updates available for end users to apply themselves is a big step in the right direction. I hope that all manufacturers in this space end up doing the same thing, as quite frankly having a tech come out to update firmware is a very old and inefficient way of doing things in this modern connected world of ours.

Gigabyte Q-Flash Plus to the rescue

This past week, I built yet another new computer for my school. Nearly identical to the last build that I did for the school, the main difference was that I went with a Gigabyte B550M Gaming motherboard instead of an Asus Prime motherboard. Why? Simply put, to avoid the issue I had last time where the motherboard wouldn’t POST with the Ryzen 5000 series CPU in it. The crucial difference between the two motherboards is that Gigabyte have included their hardware based Q-Flash Plus system on the motherboard, which lets me update the UEFI even if the UEFI is too old to boot the new generation CPUs.

That little button on the motherboard is a life and time saver. Of course, the concept isn’t exactly new – Asus had a feature like this on their higher end motherboards a decade ago already. However, it’s one of those absolutely awesome features that has taken a long time to trickle down to the budget/entry level side of things, and to this day many motherboards still don’t sport this essential feature, even though it would drastically improve the life of someone building a computer. There’s nothing quite like spending time building a PC, getting excited to hit the power button and all of a sudden seeing everything spin up but output no display signal. That scenario makes you start to question your sanity.

So what does it do? Simply put, whilst your motherboard may support a new generation of CPUs, it more than likely requires new firmware to do so. This becomes a chicken and egg situation whereby you buy the motherboard and CPU, but the motherboard came from the factory with older firmware on it and as such can’t boot your new CPU. In the past, the only way to get around this was to use a flashing CPU, i.e. a CPU that the motherboard supports out of the box: install it, flash the firmware, remove the flashing CPU and then put in your new CPU. This of course works, but is tedious and increases the risk of damage, since you are now doing twice the amount of CPU insertion and removal.

Q-Flash Plus and other systems like it basically let you flash the firmware, even if your system has no CPU, RAM or other components installed. I used it to great success this week to get the new motherboard flashed to the latest firmware. The process is pretty easy and goes like this:

- Connect motherboard to your power supply correctly, so 24 pin and 8 pin connectors.

- Use a smaller capacity flash drive. Format it with FAT32 and place the latest firmware on the drive. Rename the file to GIGABYTE.bin.

- Place the flash drive into the correct port – this is usually just above the button, but check your motherboard manual to be sure.

- Press the button once and sit back. The LED indicator near the button should start rapidly blinking, as should the USB flash drive if it has an indicator light. After a few minutes, the PC powers up whilst the Q-Flash indicator blinks in a slower pattern.

- When done, the PC in my case restarted itself and POSTed properly.

In my case, the PC restarted after the flash completed, but I already had all the components installed. Others flash the system completely bare, so in that case the motherboard may just turn off when complete. This isn’t something I have a lot of experience with, so your results may vary.
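The drive preparation step above can be scripted so the rename is never forgotten. The following is only a minimal sketch, assuming the drive has already been formatted with FAT32 and that the firmware file belongs in the root of the drive; the function name `Copy-QFlashFirmware`, the firmware file name and the drive letter are hypothetical placeholders.

```powershell
# Sketch only: copies a downloaded firmware image to the root of a USB drive
# under the file name that Q-Flash Plus looks for (GIGABYTE.bin).
# Assumes the drive has already been formatted with FAT32.
function Copy-QFlashFirmware {
    param(
        [Parameter(Mandatory)] [string] $FirmwarePath,  # the extracted firmware file
        [Parameter(Mandatory)] [string] $DriveRoot      # root of the flash drive, e.g. 'E:\'
    )

    if (-not (Test-Path -LiteralPath $FirmwarePath -PathType Leaf)) {
        throw "Firmware file not found: $FirmwarePath"
    }

    $target = Join-Path -Path $DriveRoot -ChildPath 'GIGABYTE.bin'
    Copy-Item -LiteralPath $FirmwarePath -Destination $target -Force

    # Compare hashes so a bad copy is caught before the board is flashed.
    $sourceHash = (Get-FileHash -LiteralPath $FirmwarePath -Algorithm SHA256).Hash
    $targetHash = (Get-FileHash -LiteralPath $target -Algorithm SHA256).Hash
    if ($sourceHash -ne $targetHash) {
        throw "Copy verification failed for $target"
    }

    $target   # path of the renamed copy
}

# Example (hypothetical file name and drive letter):
# Copy-QFlashFirmware -FirmwarePath 'C:\Downloads\B550MGAMING.F13' -DriveRoot 'E:\'
```

The hash comparison is an extra of mine rather than anything Gigabyte asks for; it simply guards against a flaky flash drive.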

After using this to get the new PC up and running, I’ve come to the conclusion that it is a feature all motherboards should have, no matter what level they occupy. It’s one of those features that is just too handy, too useful to be reserved for higher end motherboards only. It no doubt does add some expense to manufacturing, but again, it’s one of those things that is just too handy not to have.

Disabling POP3 and IMAP4 protocols in Exchange Online

POP3 and IMAP4 are some of the distinguished elder citizens of the internet when it comes to protocols. Used for retrieval of mails from a mailbox to a mail client of some sort, both protocols have been available on just about every mail server software package for the last 30 years. Unfortunately the protocols themselves are no longer secure for use in this modern world and it’s all but guaranteed we’ll never see another version increment of either protocol again. The world has changed since those early days when it comes to mail access, driven by players such as Google’s Gmail.

Our Exchange Online instance has had POP3 and IMAP4 enabled since the day I signed up for it in 2012 or so. Our on premises Exchange 2007 had both protocols enabled as well, though no user made use of them internally or externally. Thanks to COVID-19 and the whole remote work push, many more of our learners have started to use their school email accounts as they need it for MS Teams and other services. Unfortunately some of the learners did not make use of the excellent and free Microsoft Outlook app for their phones or tablets but instead made use of the built in mail clients on these devices. For some arcane reason, a very small handful of these devices configured themselves automatically to use IMAP4 for mailbox access. I don’t publish DNS entries for POP and IMAP, so how these clients configured themselves for IMAP4, I’ll never know.

Ordinarily I wouldn’t be too bothered about which access method users chose. However, earlier this year I came across a note in the Office 365 admin console explaining that Microsoft would be disabling “Legacy” authentication for these protocols. If I understood correctly, that means removing plain username and password sign-in (what Microsoft calls Basic authentication) and requiring clients to use OAuth on these old protocols. The main reason for the switch is security – as mentioned, both protocols are old and are not going to be receiving much TLC going forward.

Further reading opened my eyes to the fact that both old protocols could also be used for brute force attacks aimed at turning a user into a spam bot. If an attacker can guess a user’s password and confirm that it works by signing in over either protocol, they can then use that account to blast out spam via an automated API that connects to your tenant. This has happened to us a few times, where an automated attack script brute forced one of our users’ passwords and managed to turn said user into a spam bot.

I therefore decided to kill both POP3 and IMAP4 for all of our users, staff and learners alike. If we were a small business with only 5-20 users, one could disable the protocols for each user by hand in the Exchange admin center, but for 1000+ learners this isn’t realistically possible. This leaves using good old PowerShell to do the job.

To disable the protocols, make sure you have the Exchange Online module installed, as the cmdlets used are from this module.
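Getting the module installed and a session opened might look like the sketch below. It assumes the ExchangeOnlineManagement module from the PowerShell Gallery, which may not be how the session was set up when this was written; the admin address is a placeholder.

```powershell
# Install the Exchange Online module for the current user (one-off).
Install-Module -Name ExchangeOnlineManagement -Scope CurrentUser

# Load the module and sign in. The address below is a placeholder.
Import-Module ExchangeOnlineManagement
Connect-ExchangeOnline -UserPrincipalName admin@example.com
```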

(Get-CASMailbox -Filter {ImapEnabled -eq "true" -or PopEnabled -eq "true"} -ResultSize Unlimited).Count

The above command returns the number of users that have one or both protocols enabled.

Before you actually disable the protocols though, here’s the thing: you ideally want to prevent any user accounts created in future from automatically getting POP and IMAP enabled. The protocols can still be enabled manually for a user should you need them for any given reason, but the idea is to kill them for good going forward.

Get-CASMailboxPlan -Filter {ImapEnabled -eq "true" -or PopEnabled -eq "true"} | Set-CASMailboxPlan -ImapEnabled $false -PopEnabled $false

The above command stops new mailboxes from being created with POP and IMAP enabled by default.

To disable POP and IMAP for all your users, use the following command:

Get-CASMailbox -Filter {ImapEnabled -eq "true" -or PopEnabled -eq "true"} -ResultSize Unlimited | Select-Object @{n = "Identity"; e = {$_.PrimarySmtpAddress}} | Set-CASMailbox -ImapEnabled $false -PopEnabled $false

The above command finds all the users with POP and/or IMAP enabled, passes each user’s primary email address along as the Identity and pipes it to the last command, which actually disables the protocols on the mailbox. The -ResultSize Unlimited parameter matters with 1000+ learners, as Get-CASMailbox otherwise only returns the first 1000 results.
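To confirm that the change took, the count from earlier can be re-run and any stragglers listed. This is only a sketch reusing the same filter as the commands above; it needs an active Exchange Online session.

```powershell
# Requires an active Exchange Online session.
# Collect the mailboxes that still have IMAP or POP enabled.
# @() keeps .Count valid when zero or one mailbox is returned.
$remaining = @(Get-CASMailbox -Filter {ImapEnabled -eq "true" -or PopEnabled -eq "true"} -ResultSize Unlimited)

# 0 means both protocols are now off everywhere.
$remaining.Count

# List anything that slipped through.
$remaining | Select-Object PrimarySmtpAddress, ImapEnabled, PopEnabled

# Check that the mailbox plans no longer hand out the protocols by default.
Get-CASMailboxPlan | Format-List Name, ImapEnabled, PopEnabled
```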

Now any attacker trying to worm their way in via either protocol so that they can send spam will hit an impenetrable wall. That’s one less attack avenue to worry about, which is always a good thing. Users won’t notice either, so long as they are using a modern mail client or signing into Outlook Web App to check their mail. Win win, spammers be damned.

PowerShell the time saver

One of the subjects our school offers is called CAT, short for Computer Applications Technology. As part of the subject, there are usually practical examinations for grades 10 – 12 in May/June, grade 12 in September, finals for grade 12 in October and finals for grades 10 and 11 in November. New exam user accounts are created especially for each exam as it gets the learners into the habit of what ultimately the final grade 12 exam is like.

With dedicated user accounts set up, each account requires a copy of the source folder of items (documents, spreadsheets, databases etc.) that the learners will be working on during their exam. Since each account has a home folder mapped as its H drive, the source folder has to be copied into each individual home folder so that each user has their own unique copy.

Previously I would manually copy and paste the source folder into each home folder by using Windows Explorer. Slow, repetitive and occasionally open to mistakes due to a lapse in concentration, especially if you are copying the folder to 50+ directories.

Once the exam starts, the learners rename their source folder to something that is announced before the exam so that the teacher can later know which folder belongs to which learner. After the exam is complete, either the teacher or I would do a search in Windows Explorer for those folder names and, if we were lucky and got all the kids, copy the results to a master folder.

Until this year, this long winded procedure was the norm. Thanks to learning about the power of PowerShell over the last year, I dug in to see if there wasn’t a faster, more efficient way to copy the source folder before an exam and copy the modified answer folders after the exam was complete. Lo and behold, it takes just one line to accomplish each task.

To copy the source folder to each individual home folder:

Get-ChildItem -Path '\\servername\examusers\finals' -Directory | ForEach-Object {Copy-Item 'C:\Users\username\Desktop\Exam\CAT P1 2020_MSOffice_DATA' -Destination $_.FullName -Recurse -Force}

The above line of PowerShell goes through the specified directory and looks at each sub directory, which is how home folders are usually set up. It then pipes those results to Copy-Item, which copies the source folder, along with the individual files inside it, to each sub folder in the specified directory. It repeats this process until the source folder has been copied to every sub directory inside the specified top level directory.

To collect the modified folders after the exam is done:

Get-ChildItem -Path '\\servername\examusers\finals' -Directory -Recurse -Filter 1200* | ForEach-Object {Copy-Item $_.FullName -Recurse -Destination C:\Users\username\Desktop\12exams}

With this line of code, PowerShell scans through the specified location, looking only for folders, recursing through every sub folder and filtering so that only folders whose names start with 1200 are picked up. Each match is piped to Copy-Item, which copies the folder and its contents to the specified directory. That directory becomes the compilation folder containing all the answer folders from the learners’ home drives.
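The “if we were lucky and got all the kids” problem can be handled in the same pass. The sketch below is one possible approach rather than the one-liner itself: the function name `Copy-ExamAnswers` and its parameters are hypothetical, and it assumes every learner’s renamed folder has a unique name.

```powershell
# Sketch only: collects renamed answer folders into one compilation folder
# and reports the home folders in which no matching folder was found.
function Copy-ExamAnswers {
    param(
        [Parameter(Mandatory)] [string] $HomeRoot,     # parent of the exam home folders
        [Parameter(Mandatory)] [string] $Destination,  # compilation folder
        [string] $Pattern = '1200*'                    # naming pattern announced before the exam
    )

    # Create the compilation folder if it is not there yet.
    New-Item -ItemType Directory -Path $Destination -Force | Out-Null

    $missing = @()
    foreach ($h in Get-ChildItem -LiteralPath $HomeRoot -Directory) {
        # @() keeps .Count valid when zero or one folder matches.
        $answers = @(Get-ChildItem -LiteralPath $h.FullName -Directory -Recurse -Filter $Pattern)
        if ($answers.Count -eq 0) {
            $missing += $h.Name
            continue
        }
        foreach ($a in $answers) {
            Copy-Item -LiteralPath $a.FullName -Destination $Destination -Recurse -Force
        }
    }

    $missing   # names of the home folders where nothing matched
}

# Example, using the paths from the one-liner above:
# Copy-ExamAnswers -HomeRoot '\\servername\examusers\finals' -Destination 'C:\Users\username\Desktop\12exams'
```

Any names it returns are the accounts to go and check by hand.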

I intend to experiment to see if I can make things a little fancier and build the single line into a little script that includes a progress bar and can take some variables etc just to help save even more time.
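As a starting point for that fancier version, the distribution one-liner could be wrapped roughly as follows. This is a sketch only; the function name `Copy-ExamSource` and its parameters are hypothetical.

```powershell
# Sketch only: parameterised version of the distribution one-liner with a progress bar.
function Copy-ExamSource {
    param(
        [Parameter(Mandatory)] [string] $SourceFolder,  # folder handed to every learner
        [Parameter(Mandatory)] [string] $HomeRoot       # parent of the exam home folders
    )

    # @() keeps .Count valid even when only one home folder exists.
    $homes = @(Get-ChildItem -LiteralPath $HomeRoot -Directory)
    $done = 0

    foreach ($h in $homes) {
        $done++
        Write-Progress -Activity 'Copying exam source folder' -Status $h.Name -PercentComplete (100 * $done / $homes.Count)
        # The destination already exists, so the source folder lands inside it.
        Copy-Item -LiteralPath $SourceFolder -Destination $h.FullName -Recurse -Force
    }

    Write-Progress -Activity 'Copying exam source folder' -Completed
    $homes.Count   # number of home folders that received a copy
}

# Example, using the paths from the one-liner above:
# Copy-ExamSource -SourceFolder 'C:\Users\username\Desktop\Exam\CAT P1 2020_MSOffice_DATA' -HomeRoot '\\servername\examusers\finals'
```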

Another example of why PowerShell is so powerful and flexible. I’m still far from comfortable or fluent, but every little step I can take to help automate things and make my life easier is a win in my eyes!

Repairing unbootable swapped Windows 10 SSD

As mentioned in my last post, I swapped an SSD from a dead 4th gen Core i3 box into a 3rd gen Core i3 box. Normally, I would expect such a swap to just work and the system to boot, especially since both drives were GPT formatted and the systems had been configured to boot in UEFI mode. The UEFI on the 3rd gen box should have seen the EFI boot partition on the drive and should have booted Windows. Unfortunately this was not the case, as the UEFI simply didn’t see the drive as bootable. I don’t know if this is because the 4th gen motherboard was a Gigabyte model and the 3rd gen an Intel model.

This was annoying, as I did not want to have to install Windows from scratch. I decided to try and fix the problem, as it would be quicker than backing up the data, installing and configuring Windows again. Using the ever excellent Rufus, I built a Windows 10 1809 flash drive and booted the PC.

Choosing to repair the PC, I first tried to use the automatic repair option. This ran but was unable to repair anything. Next, I went to the command line. Issuing “bootrec /fixmbr”, “bootrec /fixboot” and “bootrec /rebuildBCD” made no difference. Whilst fixmbr and rebuildBCD ran without errors, fixboot failed with access denied errors and rebuildBCD did not find a Windows install.

Rebooting the PC after trying the above options got me nowhere: there was no new UEFI boot entry, so the PC remained unbootable.

After turning to the almighty internet, I came across the following which did the trick in the end for me:

- Using Diskpart, assign a drive letter to the FAT32 EFI partition.

- Format said partition with FAT32, making it clean and fresh.

- Rebuild the BCD using an entry on the command line.

Steps in detail are as follows:

1. Boot the PC using your Windows install flash drive. Choose to repair your computer and choose the option to use the Command Prompt.

2. Run Diskpart. Type in “lis vol” to list the volumes on the PC. You are looking for the volume that is between 100-500MB in size and is formatted with the FAT32 file system. It may be labelled “BOOT”.

3. Type “sel vol x” where x is the number of the volume from step 2.

4. Type “format quick fs=fat32”.

5. Type “assign letter=X” where X is the drive letter you want.

6. Exit Diskpart back to the command prompt.

7. Type “bcdboot C:\Windows /s X: /f UEFI” where X: is the drive letter from step 5.

Once you are done, exit the recovery environment and reboot. If all went well, the Windows Boot Manager should be registered with the UEFI of the PC and Windows should boot itself up. The EFI partition should not be visible in Windows after it has booted.

I seem to recall reading that the bcdboot command had changed from earlier Windows 10 versions, but I would suggest using the above commands as it is what is listed on the Microsoft Docs help page.

Downloading your SparkPost suppression list

One of the tools any bulk mailing service uses to maintain good list hygiene is a suppression list. This list contains bad email addresses: addresses that have repeatedly bounced or have other errors. If mail were allowed to be sent to those addresses, it would bounce or get the sender classified as a spammer.

Inside the SparkPost web console, you can search for individual email addresses to remove them from the suppression list. Unfortunately there isn’t a way to download a CSV file containing the entire list in the console. Thankfully you can download it via the command line, but the help documentation only mentions curl, presumably on the assumption that you are using macOS or Linux/BSD to manage things, or have access to the Unix tools. curl is available for Windows, but I wondered to myself if one could do the same thing in PowerShell. It took a while and a lot of mistakes, but I put together a script that does the same thing as the curl version and as a bonus converts the downloaded JSON formatted file into a nice CSV file for further analysis in a tool like Excel.

Copy and paste the following code into a text editor or the PowerShell ISE and save it with a .ps1 extension. You may need to change your script execution policy to bypass to run this script if you haven’t previously done so.

<#

NAME

SparkPost suppression list

AUTHOR

Craig Murray

SYNOPSIS

This script will download the SparkPost suppression list for your account in JSON format, then convert it to CSV format and delete the JSON file.

DESCRIPTION

I wrote this script because basically everything on SparkPost’s website and help documentation assume you are using macOS or a Linux distro with access to Unix tools.

It took a while but I managed to get this script up and running with a lot of reading up online.

The OS Version check is in place due to Windows 7 and 8 still supporting SSL in PowerShell, which will fail to connect to SparkPost’s site.

I am not experienced enough to understand why it doesn’t negotiate using TLS first, but the code below takes care of that. On Windows 10 out the box this isn’t an issue.

USAGE

Change the "XXY" text next to "Authorization" with your own valid API key for your account. You can also modify where the file ends up being saved though I find the Desktop to be easiest and quickest place to save output file.

VERSION HISTORY

1.0 05 April 2020 – Initial release

LICENSE AND DISCLAIMER

You have a royalty-free right to use, modify, reproduce, and distribute this script file in any way you find useful, provided that you agree that the author above has no warranty, obligations or liability for such use.

#>

$UPD = "$($env:USERPROFILE)\Desktop"

$OSV = (Get-CimInstance Win32_OperatingSystem).version

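# Compare as [version] objects: as plain strings, "6.1.7601" (Windows 7) would not sort before a "10..." value.

If ([version]$OSV -lt [version]"10.0")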

{

[Net.ServicePointManager]::SecurityProtocol = [Net.SecurityProtocolType]::Tls12

}

Invoke-WebRequest -Uri 'https://api.sparkpost.com/api/v1/suppression-list/' -Headers @{'Authorization' = 'XXY'} -OutFile "$UPD\supplist.json"

((Get-Content -Path "$UPD\supplist.json" -Raw) | ConvertFrom-Json).results | Export-Csv -Path "$UPD\Supplist.csv" -NoTypeInformation

Remove-Item $UPD\supplist.json

I’m still very much an absolute newbie to the power and possibilities of PowerShell, but I hope that this could help someone out there.
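A possible refinement, offered as a sketch rather than a replacement: Invoke-RestMethod parses the JSON response itself, so the intermediate file can be skipped. It makes the same single request as the script above; the function name `Export-SuppressionList` and its parameters are hypothetical.

```powershell
# Sketch only: same download as the script above, without the temporary .json file.
function Export-SuppressionList {
    param(
        [Parameter(Mandatory)] [string] $ApiKey,   # your own SparkPost API key
        [string] $CsvPath = (Join-Path $env:USERPROFILE 'Desktop\Supplist.csv')
    )

    # Older Windows/.NET defaults may not offer TLS 1.2, so add it to whatever is enabled.
    [Net.ServicePointManager]::SecurityProtocol = [Net.ServicePointManager]::SecurityProtocol -bor [Net.SecurityProtocolType]::Tls12

    # Invoke-RestMethod converts the JSON response into objects.
    $response = Invoke-RestMethod -Uri 'https://api.sparkpost.com/api/v1/suppression-list/' -Headers @{ Authorization = $ApiKey }

    # The entries sit under the "results" property, as in the script above.
    $response.results | Export-Csv -Path $CsvPath -NoTypeInformation
}

# Example (placeholder key):
# Export-SuppressionList -ApiKey 'XXY'
```

Adding TLS 1.2 unconditionally also sidesteps the OS version check altogether.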

And the Dead did rise…

A week ago, I finally took possession of my little USB powered CH341A chip programmer. From order to delivery it took about 2 and a half weeks, which wasn’t too bad all things considered.

As mentioned in a previous post, this device was going to help me save the Zotac ZBOX that locked up when flashing its firmware. After my programmer arrived, I popped off the lid of the ZBOX, removed the BIOS chip from its socket and put it into the programmer, following the diagram silk screened on the side of the device. Long and short of it, SPI flash chips go in the top half of the programmer, closest to the USB connector, with Pin 1 pointed down towards the bottom of the programmer where the socket lever is.

After installing the driver on my PC, I inserted the programmer with the chip in it. It was detected right away and was visible in the programming software – CH341A programmer v 1.18. The SPI flash chip was identified immediately and I proceeded to erase the chip first, as the flash had only been partial. Once the chip was erased, I loaded up the firmware and hit the program button. About 90 seconds later, the chip was done and ready to go. I verified the flash and the software said everything was in order.

The flash chip went back into its socket on the ZBOX motherboard. I connected it up to a monitor and keyboard/mouse combo and after crossing fingers, hit the power button. Sure enough, the device posted! No error beeps this time around. After entering the firmware and configuring the settings to how they should be for UEFI booting, I let the box boot into Windows. Sure enough, it did so and just like that, the job was done. The box was back from the dead and would now be sent to live out its life powering the TV in our reception area.

I’m really glad I have the tool as it’s an important part of my toolkit, but unless something goes drastically wrong in future, it’s the kind of tool you aren’t going to need very often unless you are a firmware hacker, tinkerer or board repair shop. Of course the tool won’t work with the older PLCC socketed chips, but boards that still use those kinds of chips are really old hardware by now, and thankfully there are programmers out there that still cater to those types of sockets.

MBR 2 GPT – What a tool!

Back with the Windows 10 1703 release, Microsoft released an amazing little tool that I didn’t pay much attention to: MBR2GPT.exe. Built into that edition and also Windows PE versions from then on, the tool is simple and does one primary job – converting the MBR disk layout format to GPT layout and essentially converting Windows into a UEFI install after the next reboot.

Why is such a tool useful you may ask? Simply put, there are production computers out there running Windows 7 on UEFI capable hardware that haven’t yet been upgraded to Windows 10 for some or another reason. It may be software that has to be relicensed or the company providing the software went out of business and now the software is mission critical. Maybe it was the time factor of backing up the user profile, nuking and installing Windows 10 and then restoring the user profile.

With this tool, it’s now quite easy. Upgrade the existing Windows 7 MBR/Legacy BIOS install to Windows 10 MBR/Legacy BIOS. Run the tool, reboot into the firmware and disable the CSM so that you can have a pure UEFI boot. Turn on Secure Boot as well and reboot, then magically watch Windows come back up without any data being lost at all, not to mention being that bit more secure now with Secure Boot enabled.

Whether you upgrade via ISO image or Deployment Toolkit, this little tool will help you convert those Windows 7 computers without the full nuke and pave scenario chewing up time. Since the base Windows is being replaced, the upgrade should largely be as stable as a fresh install, though a few drivers may need to be replaced or reinstalled after the upgrade.

I have got some computers I will be doing this procedure on shortly at school, which will help increase my Windows 10 numbers even more.

To run the tool, all you need to do is run the following commands from an Administrator Command Prompt:

To validate that your drive can be converted: mbr2gpt.exe /validate /allowFullOS

To actually do the conversion: mbr2gpt.exe /convert /allowFullOS

Once you reboot, make sure that you disable the CSM in the firmware and that UEFI booting is enabled as the default or the computer will fail to boot.
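The two commands can be chained so that the conversion only runs when validation passes. This is a rough sketch for an elevated PowerShell session on the machine being converted; it assumes that mbr2gpt signals success with an exit code of 0, in line with the return codes Microsoft documents for the tool.

```powershell
# Sketch only: run from an elevated PowerShell session on the machine being converted.
# Show the partition style of each disk first; only MBR disks need converting.
Get-Disk | Select-Object Number, FriendlyName, PartitionStyle

# Validate, and convert only if validation succeeded (exit code 0 assumed to mean success).
mbr2gpt.exe /validate /allowFullOS
if ($LASTEXITCODE -eq 0) {
    mbr2gpt.exe /convert /allowFullOS
} else {
    Write-Warning "Validation failed with exit code $LASTEXITCODE; the disk was not converted."
}
```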

Last but not least, your computer should be capable of UEFI booting. This should work in theory all the way back to Intel’s 5 series chipset, although you will be better off with a minimum of the 7 series chipset, since those supported Secure Boot. The 6 series chipset works, but lacks configuration options and Secure Boot.

MDT with no working USB system

MDT uses Windows PE to boot a PC and perform the first stages of an install. Windows PE is built from the latest edition in the Windows ADK, which is now at a version supporting Windows 10 1803. As such you would expect pretty stellar support out of the box for well established and older hardware, but this isn’t always the case.

Recently I was working with some 8 year old PCs that have an Intel P35 chipset in them, using the ICH9 southbridge. The MSI Neo P35 boards had 1 BIOS update before the boards were promptly forgotten by MSI. USB options in the BIOS include turning the controller on and off as well as enabling/disabling Legacy support – that’s it.

When booting the PC, the keyboard and mouse work fine in the BIOS as well as on the option ROM screens to select network boot, F12 to enter MDT etc. The problem came in that as soon as I got into MDT, the keyboard and mouse vanished. Unplugging and re-plugging the devices didn’t help, nor did switching ports. Unfortunately I no longer have any PS/2 port based mice or keyboards, so I was stuck and unable to continue the MDT task sequence. Disabling USB Legacy support simply meant that the keyboard stopped working for the option ROM and network booting screen, so I couldn’t even enter MDT in the first place.

There wasn’t a huge amount of info on the net, but it seemed to be related to the drivers in use for the USB controller. I tried deleting the driver in MDT and then rebuilding the boot image, but my first attempt had no luck – keyboard and mouse still vanished once in MDT. I then built a “clean” boot image with no additional drivers in it bar what is built into Windows PE and this time I had mouse and keyboard working right away. Yay, problem solved I thought.

Wrong. Once Windows was installed, my mouse and keyboard refused to work and I was unable to interact with Windows at all. Changing keyboards and ports did nothing. Eventually I used Remote Desktop to connect to the PC and looked at Device Manager. Sure enough, there were yellow bangs on most of the USB controller entries, so the actual ICH9 controller wasn’t fully installed by the drivers in Windows 10.

I found a zip on Intel’s site for their chipset/INF installer, dated from the early 2010s, but the actual INF driver for the ICH9 USB controller dates from 2008. These installed perfectly on Windows 10 and enabled me to use the PC.

My next attempt saw me take these INF files and add them to MDT’s driver pool, then build new boot images using these drivers. Lo and behold, not only did Windows PE behave on boot, the finished Windows 10 install worked correctly as well.

What puzzles me is that with my first attempt, I already had ICH9 drivers in the boot image, but these dated from 2013. There must have been some sort of bug in these drivers during use in Windows PE, so I simply reverted to the older 2008 ICH9 USB drivers – it’s only 2 files in total that need to be injected.
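For reference, the driver import and boot image rebuild can also be done from PowerShell instead of the Deployment Workbench. The following is only a rough sketch: it assumes a default MDT install path and that the boot image is configured to pick up drivers from the folder used here, and the share path, drive name and driver source folder are all placeholders.

```powershell
# Sketch only: every path and name below is a placeholder for your own environment.
Import-Module 'C:\Program Files\Microsoft Deployment Toolkit\bin\MicrosoftDeploymentToolkit.psd1'

# Map the deployment share as a PowerShell drive.
New-PSDrive -Name 'DS001' -PSProvider MDTProvider -Root 'D:\DeploymentShare'

# Import the 2008 ICH9 USB INF files into the driver pool.
Import-MDTDriver -Path 'DS001:\Out-of-Box Drivers' -SourcePath 'C:\Drivers\ICH9-USB' -Verbose

# Regenerate the Windows PE boot images so that they include the imported drivers.
Update-MDTDeploymentShare -Path 'DS001:' -Verbose
```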

In the end, older hardware is going to have odd little quirks like this that eat up time and cause puzzlement while you try and figure out what the problem is. Of course, newer hardware isn’t exempt either – Intel’s I219-V network card has a bug that prevents PXE booting and not all motherboard manufacturers released UEFI updates to fix this issue as they probably didn’t deem it worth their time to do so.

Installing KB3000850 on Windows Server 2012 R2

I recently had cause to set up a new Windows Server 2012 R2 VM at work. As per usual for an operating system of this age, there were a lot of updates waiting once the server contacted WSUS. However, the process was a bit different to the past, mainly due to two huge updates to Windows Server 2012 R2: the first needs a servicing stack update before it will install, and the later one depends on the first being installed.

It should be noted that my host server is 2012 R2 and that my VM is being served up by Hyper-V. The guest 2012 R2 VM is running as a Generation 2 VM and Secure Boot is enabled by default.

After the first round of updates, my server didn’t download any more updates. WSUS had expired the relevant servicing stack update that would enable the very important April 2014 update to install, which is needed for all 2012 R2 updates going forward. I installed a later servicing stack update manually, which then let the April 2014 and subsequent updates install – something like 155 of them. After that reboot, there were 5 patches left to go, one of them being KB3000850.

Unfortunately this is when the problems started. I would install the last batch of updates, only to have the server get to about 98 or 99% and then have the updates fail and then spend a lot of time reverting the updates. It was annoying and repeated attempts to install the patches kept failing. I left the server and went home, vowing to solve the issue the next day.

After viewing the Microsoft KB article on this patch, I suddenly recalled that I had the exact same problem on my existing VMs a few years ago and that I had gotten around the problem in the end with an extremely easy, if time consuming trick. Simply shut down the VM, disable Secure Boot, install the patch and reboot, then shut down the VM and re-enable Secure Boot. It took a while, but eventually the patch installed cleanly and my server was finally up to date.
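The Secure Boot toggle can be done from PowerShell on the Hyper-V host rather than through the VM settings dialog. This is a minimal sketch; the VM name is a placeholder, and the patch itself is still installed inside the guest by hand.

```powershell
# Sketch only: run on the Hyper-V host in an elevated session.
# 'SRV2012R2-GUEST' is a placeholder VM name.
$vmName = 'SRV2012R2-GUEST'

# Secure Boot can only be changed while the VM is off.
Stop-VM -Name $vmName
Set-VMFirmware -VMName $vmName -EnableSecureBoot Off
Start-VM -Name $vmName

# ...install KB3000850 inside the guest and let it finish rebooting, then:

Stop-VM -Name $vmName
Set-VMFirmware -VMName $vmName -EnableSecureBoot On
Start-VM -Name $vmName
```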

So far Microsoft’s Cumulative patching model seems to be working well enough to cut down on the number of individual patches going forward, but they haven’t yet ingested and added all the older patches into these Cumulative updates going back to the last baseline, which is the April 2014 mega patch. If they did this, the number of patches being installed would drop dramatically and patching speed would perhaps increase as well. It would also certainly help clean out my WSUS installation!